Support vector machine

- Types of Support Vector Machines

- How Does Support Vector Machine Work?

- Support Vector Machine Terminology

- Mathematical Intuition of Support Vector Machine

- Popular Kernel Functions in SVM

- Advantages of Support Vector Machine

- Disadvantages of Support Vector Machine

Support Vector Machines (SVMs) are a notable class of supervised learning models for classification and regression. Fundamentally, SVMs are discriminative classifiers: they find the optimal hyperplane in feature space, and that hyperplane is used to separate different groups of data points. SVMs are effective and adaptable, and can handle regression, outlier detection, and a range of classification problems, both linear and nonlinear.

These methods find applications in numerous fields, including handwriting recognition, spam detection, text categorization, gene classification, and many more. SVMs are well suited to high-dimensional data with nonlinear relationships.

The primary goal of an SVM is to find the hyperplane in feature space that maximizes the margin between the nearest data points of different classes. The dimensionality of the hyperplane depends on the number of features: it always has one dimension fewer than the feature space. With two features, for instance, the hyperplane is just a straight line. With three features, it becomes a plane. As the number of features increases, the hyperplane lives in a higher-dimensional space, which makes it harder to visualize. SVMs are easy to experiment with in Python, so we use Python in this blog. In this post, we learn about the SVM algorithm and explain how the support vector machine works.

Example of Support Vector Machine

Let's suppose we are farmers sorting grains. Normally we would use a sieve, but what if the grains are oddly shaped or similar in size? In that situation, a Support Vector Machine (SVM) can act like a smart sorting machine.

SVMs excel at classification tasks such as separating the wheat from the chaff (good grains vs. bad grains). An SVM analyzes the data points (grains) based on features like size, color, and weight. Let's take grain size and texture as the features for our case.

With the SVM algorithm, we create a separation line, or hyperplane, in this feature space. The hyperplane is chosen to maximize the distance between the two categories, wheat and chaff. Grains (data points) on one side of the line are classified as wheat, and grains on the other side are classified as chaff.

The key advantage of an SVM is that it finds the best separation line even if the data isn't perfectly separable. Suppose some grains are oddly shaped and show up as outliers. The SVM can handle these by finding the best possible hyperplane despite such complexities.

Once the SVM is trained on the labeled data (wheat and chaff samples), it can classify new grains (data points) that it has not seen before. The SVM analyzes the features of these new grains and assigns each one to a class based on its position relative to the hyperplane.
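To make the grain example concrete, here is a minimal sketch using scikit-learn's `SVC` class. It assumes scikit-learn is installed; the grain measurements (size in millimetres and a texture score between 0 and 1) are made-up values chosen only for illustration.

```python
from sklearn.svm import SVC

# Hypothetical measurements: [size_mm, texture_score]; the values are made up.
X = [
    [6.0, 0.90], [6.5, 0.80], [7.0, 0.85], [6.8, 0.95],  # wheat
    [2.0, 0.20], [2.5, 0.30], [3.0, 0.10], [2.2, 0.25],  # chaff
]
y = ["wheat"] * 4 + ["chaff"] * 4

# A linear kernel asks for a straight separating line in this 2-D feature space.
clf = SVC(kernel="linear", C=1.0)
clf.fit(X, y)

# Classify grains the model has not seen before.
new_grains = [[6.2, 0.88], [2.4, 0.15]]
predictions = [str(label) for label in clf.predict(new_grains)]
print(predictions)

# The support vectors are the training grains closest to the separating line.
print(clf.support_vectors_)
```

On real data the features would usually be scaled first, since size and texture are measured on very different ranges.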

Types of Support Vector Machines

We can divide Support Vector Machines into two categories:

Linear Support Vector Machines are used for linearly separable data, meaning the classes can be separated by a single straight line (or, in higher dimensions, a flat hyperplane). In this case, a linear SVM classifier finds the decision boundary with the largest margin between the classes.

Non-linear Support Vector Machines are used when the data cannot be separated by a straight line. Because of the way the data points are arranged, a more complex, non-linear decision boundary is needed to split the classes. Non-linear SVM classifiers handle such data by using kernel functions to map it into a higher-dimensional space where linear separation becomes possible.

How does Support Vector Machine work?

Linear SVM - A reasonable choice for the best hyperplane is the one with the largest separation, or margin, between the two classes.

Now suppose there is just one blue point inside the red region. How does an SVM handle this? It looks for the maximum margin as before, but adds a penalty each time a point crosses the margin. Margins that tolerate such violations are called "soft margins".

With a soft margin, the SVM tries to minimize (1/margin + λ(∑penalty)), where λ weights the penalty term. Hinge loss is the most commonly used penalty. If there is no violation, the hinge loss is zero; if there is a violation, the loss is proportional to the distance of the violation.
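As a small illustration of the penalty, the sketch below computes the hinge loss, max(0, 1 - y(w·x + b)), for three points. The hyperplane and the sample points are hypothetical values picked so the arithmetic is easy to follow.

```python
def hinge_loss(w, b, x, y):
    """Hinge loss max(0, 1 - y * (w.x + b)) for one sample with label y in {+1, -1}."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return max(0.0, 1.0 - y * score)

# Hypothetical hyperplane x1 = 0, i.e. weights w = (1, 0) and bias b = 0.
w, b = (1.0, 0.0), 0.0

samples = [
    ((2.0, 1.0), +1),   # correct side, outside the margin
    ((0.5, 0.0), +1),   # correct side, but inside the margin
    ((-1.0, 0.0), +1),  # wrong side of the hyperplane
]

losses = [hinge_loss(w, b, x, y) for x, y in samples]
print(losses)  # [0.0, 0.5, 2.0]
```

The first point incurs no penalty, and the penalty grows the further a point moves past the margin.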

Non-linear SVM - So far we have looked only at linear SVMs and linearly separable data. Now we look at datasets that are not linearly separable, that is, data that cannot be separated by a single straight line.

For such a dataset, no single line cleanly separates the data points. Likewise, with more than two classes a single straight line cannot separate them all. In this example a straight line fails, but a circular boundary can separate the two classes. Therefore, we add another coordinate Z, computed from X and Y as Z = X² + Y².

Adding this third dimension changes the picture: in 3-D space the classes are separated by a flat plane, which appears parallel to the x-axis. If we convert it back into 2-D space at Z = 1, the boundary becomes a circle.

In other words, the dataset is separated using the Z coordinate at Z = 1, which corresponds to a circle of radius 1 in the original X-Y space.
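The effect of adding Z = X² + Y² can be checked with a short sketch. The two rings of points below are hypothetical data, generated so that one class lies inside the unit circle and the other outside it.

```python
import math

def lift(x, y):
    """Map a 2-D point to 3-D by adding Z = X^2 + Y^2."""
    return (x, y, x * x + y * y)

# Hypothetical data: class "inner" lies on a circle of radius 0.5,
# class "outer" on a circle of radius 2, both centered at the origin.
angles = [k * math.pi / 4 for k in range(8)]
inner = [(0.5 * math.cos(a), 0.5 * math.sin(a)) for a in angles]
outer = [(2.0 * math.cos(a), 2.0 * math.sin(a)) for a in angles]

# After lifting, the flat plane Z = 1 separates the two classes.
inner_z = [lift(x, y)[2] for x, y in inner]
outer_z = [lift(x, y)[2] for x, y in outer]

print(max(inner_z) < 1.0 < min(outer_z))  # True
```

No straight line separates the two rings in the X-Y plane, but a single threshold on Z does.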

Support Vector Machine Terminology

A hyperplane serves as the decision boundary and partitions the feature space into classes. For linearly separable datasets, the hyperplane is represented by a linear equation, typically written as wx + b = 0. Here 'w' is the weight vector, 'x' is the input feature vector, and 'b' is the bias term.
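A point is classified by checking which side of the hyperplane wx + b = 0 it falls on. The sketch below shows this with a hypothetical weight vector and bias; treating a value of exactly zero as the positive class is an arbitrary choice made here.

```python
def decision_value(w, x, b):
    """Evaluate w.x + b for a single point x."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def classify(w, x, b):
    """Return +1 or -1 depending on the side of the hyperplane."""
    return 1 if decision_value(w, x, b) >= 0 else -1

# Hypothetical hyperplane x1 + x2 - 3 = 0
w, b = (1.0, 1.0), -3.0

print(classify(w, (3.0, 2.0), b))  # 1   (3 + 2 - 3 = 2)
print(classify(w, (0.5, 1.0), b))  # -1  (0.5 + 1 - 3 = -1.5)
```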

Support vectors are the data points closest to the hyperplane, and they are essential for determining the hyperplane and the margin. The margin is the distance between the hyperplane and the support vectors. Maximizing the margin is a crucial goal of SVMs, since a larger margin typically leads to better classification performance.

SVMs use mathematical functions known as kernel functions to map the input data points into a feature space with additional dimensions. This transformation makes it possible to find nonlinear decision boundaries even when the data points aren't linearly separable in their original input space. Commonly used kernels include the linear, polynomial, radial basis function (RBF), and sigmoid kernels.

Support vector machines employ two types of margins: hard and soft. A hard margin hyperplane separates the data points of the different classes with no misclassifications. A soft margin hyperplane allows some violations or misclassifications, usually to deal with outliers or data that is not perfectly separable.

In SVM, the regularization parameter 'C' controls the balance between maximizing the margin and minimizing the misclassification penalty. A larger 'C' value means a harsher penalty for violations, which leads to fewer misclassifications on the training data and a narrower margin.
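The role of 'C' can be seen by scoring two candidate hyperplanes under different values of C. This is a sketch under simplifying assumptions: the data is one-dimensional and made up, and the two candidates are hand-picked for illustration rather than produced by a solver. The objective used is 0.5·w² + C·(sum of hinge penalties).

```python
def objective(w, b, data, C):
    """Soft-margin objective 0.5 * w^2 + C * sum of hinge penalties (1-D features)."""
    penalties = sum(max(0.0, 1.0 - y * (w * x + b)) for x, y in data)
    return 0.5 * w * w + C * penalties

# Hypothetical 1-D data: (feature, label). The point (0.5, +1) is an outlier
# that sits close to the negative class.
data = [(3.0, +1), (4.0, +1), (0.5, +1), (-3.0, -1), (-4.0, -1)]

# Two hand-picked candidate hyperplanes (not the output of a solver):
wide = (1 / 3, 0.0)      # margin width 2/|w| = 6, the outlier violates the margin
narrow = (4 / 7, 5 / 7)  # margin width 2/|w| = 3.5, no violations

def preferred(C):
    """Return the name of the candidate with the lower objective for this C."""
    wide_cost = objective(*wide, data, C)
    narrow_cost = objective(*narrow, data, C)
    return "wide" if wide_cost < narrow_cost else "narrow"

print(preferred(0.01))  # wide
print(preferred(10.0))  # narrow
```

With a small C the violation is cheap, so the wider margin wins; with a large C the penalty dominates and the narrower, violation-free margin is preferred.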

Hinge loss is a popular loss function in SVMs that penalizes margin violations and misclassifications. SVM objective functions typically combine a hinge loss term with a regularization term.

The dual problem in SVMs is to optimize the Lagrange multipliers associated with the training samples; the samples with nonzero multipliers are the support vectors. This formulation allows the use of kernel methods, because the data enters only through pairwise kernel evaluations, which makes computation more efficient, especially in high-dimensional feature spaces.

Mathematical intuition of Support Vector Machine

Assume we are given a binary classification task with two labels, +1 and -1. The training dataset consists of feature vectors X and labels Y. The equation of the linear hyperplane can then be written as:

$$w^T x + b = 0$$

Here the vector w is the normal vector of the hyperplane, that is, the direction perpendicular to it. The parameter b determines the offset of the hyperplane from the origin along the normal vector w.

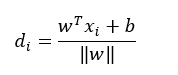

The signed distance between a data point x_i and the decision boundary can be calculated as:

$$d_i = \frac{w^T x_i + b}{\lVert w \rVert}$$

Here ||w|| is the Euclidean norm of the weight vector w.

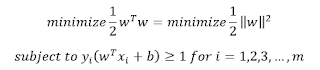
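The distance from a point to the hyperplane, (w·x + b) / ||w||, is easy to verify numerically. The hyperplane and points below are hypothetical values chosen so that ||w|| = 5 and the results come out as round numbers.

```python
import math

def signed_distance(w, b, x):
    """Signed distance (w.x + b) / ||w|| from point x to the hyperplane."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    return (sum(wi * xi for wi, xi in zip(w, x)) + b) / norm

# Hypothetical hyperplane 3*x1 + 4*x2 - 10 = 0, so ||w|| = 5.
w, b = (3.0, 4.0), -10.0

points = [(4.0, 2.0), (0.0, 0.0), (6.0, 3.0), (-2.0, -1.0)]
distances = [signed_distance(w, b, p) for p in points]
print(distances)  # [2.0, -2.0, 4.0, -4.0]

# The smallest absolute distance belongs to the points nearest the boundary.
closest = min(abs(d) for d in distances)
print(closest)  # 2.0
```

The sign tells us which side of the hyperplane a point is on, and the points with the smallest absolute distance are the ones that would act as support vectors.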

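For a linear SVM classifier, the predicted label is given by the sign of the decision function:

$$\hat{y} = \begin{cases} +1 & \text{if } w^T x + b \geq 0 \\ -1 & \text{if } w^T x + b < 0 \end{cases}$$

Optimization

For a hard margin linear SVM classifier, with m training samples:

$$\min_{w,\,b} \; \frac{1}{2}\lVert w \rVert^2 \quad \text{subject to} \quad y_i (w^T x_i + b) \geq 1, \quad i = 1, \dots, m$$

For a soft margin linear SVM classifier, where the slack variables ζ_i measure how far each sample violates the margin:

$$\min_{w,\,b,\,\zeta} \; \frac{1}{2}\lVert w \rVert^2 + C \sum_{i=1}^{m} \zeta_i \quad \text{subject to} \quad y_i (w^T x_i + b) \geq 1 - \zeta_i, \quad \zeta_i \geq 0, \quad i = 1, \dots, m$$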
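One simple way to see the soft margin optimization in action is to minimize 0.5·||w||² + C·(sum of hinge losses) with plain subgradient descent. This is only a sketch for intuition: production SVM libraries use more sophisticated solvers, and the learning rate, epoch count, and toy data below are arbitrary choices made for this example.

```python
def train_linear_svm(data, C=1.0, lr=0.01, epochs=2000):
    """Minimize 0.5*||w||^2 + C * sum(hinge) with plain subgradient descent."""
    dim = len(data[0][0])
    w = [0.0] * dim
    b = 0.0
    for _ in range(epochs):
        # The gradient of the regularization term 0.5*||w||^2 is w itself.
        grad_w = list(w)
        grad_b = 0.0
        for x, y in data:
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if margin < 1:  # this point violates the margin
                for j in range(dim):
                    grad_w[j] -= C * y * x[j]
                grad_b -= C * y
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

def predict(w, b, x):
    """Predict +1 or -1 from the sign of w.x + b."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if score >= 0 else -1

# Hypothetical, linearly separable toy data: (features, label).
data = [
    ((2.0, 2.0), +1), ((3.0, 3.0), +1), ((3.0, 2.0), +1),
    ((0.0, 0.0), -1), ((-1.0, 0.0), -1), ((0.0, -1.0), -1),
]

w, b = train_linear_svm(data)
train_predictions = [predict(w, b, x) for x, _ in data]
print(w, b)
print(train_predictions)
```

Only the points that violate the margin contribute to the update, which mirrors the idea that the final hyperplane is determined by the points nearest to it.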

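Dual Problem: Training an SVM can also be framed in terms of the Lagrange multipliers associated with the training samples; the samples that end up with nonzero multipliers are the support vectors. This formulation is known as the dual problem. The optimal Lagrange multipliers α_i maximize the following dual objective function, where y_i is the label of the ith sample:

$$\max_{\alpha} \; \sum_{i} \alpha_i - \frac{1}{2} \sum_{i} \sum_{j} \alpha_i \alpha_j y_i y_j K(x_i, x_j) \quad \text{subject to} \quad 0 \leq \alpha_i \leq C, \quad \sum_{i} \alpha_i y_i = 0$$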

In the above equation:

- α_i is the Lagrange multiplier associated with the ith training sample.

- K(x_i, x_j) is the kernel function that computes the similarity between two samples x_i and x_j. Kernels allow the SVM to handle nonlinear classification problems by implicitly mapping the samples into a higher-dimensional feature space.

- ∑α_i represents the sum of all the Lagrange multipliers.

Once the dual problem has been solved, the decision boundary of the SVM can be characterized using the support vectors and the optimal Lagrange multipliers. The support vectors are the training samples with α_i greater than 0, and they define the decision function:

$$f(x) = \sum_{i \,:\, \alpha_i > 0} \alpha_i y_i K(x_i, x) + b$$

A new point x is assigned to class +1 if f(x) ≥ 0 and to class -1 otherwise.
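To see how the multipliers and support vectors produce a decision, the sketch below evaluates f(x) = Σ α_i y_i K(x_i, x) + b and the dual objective on a toy problem. The problem is hypothetical: it has only two training points, both support vectors, and is small enough that the hard margin solution (α = 0.25 for each point, b = 0) can be worked out by hand. A linear kernel is assumed.

```python
def linear_kernel(u, v):
    """K(u, v) = u . v"""
    return sum(ui * vi for ui, vi in zip(u, v))

# Toy problem with two training points, both of which are support vectors.
# The values alpha = 0.25 and b = 0 were derived by hand for this case.
support_vectors = [(1.0, 1.0), (-1.0, -1.0)]
labels = [+1, -1]
alphas = [0.25, 0.25]
b = 0.0

def decision_function(x, kernel=linear_kernel):
    """f(x) = sum_i alpha_i * y_i * K(x_i, x) + b over the support vectors."""
    return sum(a * y * kernel(sv, x)
               for a, y, sv in zip(alphas, labels, support_vectors)) + b

def dual_objective(kernel=linear_kernel):
    """sum_i alpha_i - 0.5 * sum_ij alpha_i alpha_j y_i y_j K(x_i, x_j)"""
    n = len(alphas)
    quad = sum(alphas[i] * alphas[j] * labels[i] * labels[j]
               * kernel(support_vectors[i], support_vectors[j])
               for i in range(n) for j in range(n))
    return sum(alphas) - 0.5 * quad

print(decision_function((1.0, 1.0)))    # 1.0, a support vector sits on the margin
print(decision_function((-1.0, -1.0)))  # -1.0
print(decision_function((2.0, 0.0)))    # 1.0
print(dual_objective())                 # 0.25
```

The dual objective value 0.25 matches 0.5·||w||² for the corresponding primal solution w = (0.5, 0.5), as expected at the optimum.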

Popular kernel functions in SVM

By mapping a low-dimensional input space into a higher-dimensional space, SVM kernels turn problems that are not linearly separable into separable ones. This is especially helpful for problems involving non-linear separation. All we need to do is choose a kernel; the SVM then works out how to separate the data according to the labels or outputs we have defined, while the kernel handles the complex data transformations. Commonly used kernels include the linear, polynomial, radial basis function (RBF), and sigmoid kernels.
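The four kernels mentioned in this post can be written in a few lines each. The sketch below uses their commonly quoted textbook forms; the parameter names (`gamma`, `coef0`, `degree`) and their default values are assumptions chosen for this example, not settings taken from any particular library.

```python
import math

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(ui * vi for ui, vi in zip(u, v))

def linear_kernel(u, v):
    """K(u, v) = u . v"""
    return dot(u, v)

def polynomial_kernel(u, v, gamma=1.0, coef0=1.0, degree=2):
    """K(u, v) = (gamma * u . v + coef0) ** degree"""
    return (gamma * dot(u, v) + coef0) ** degree

def rbf_kernel(u, v, gamma=0.5):
    """K(u, v) = exp(-gamma * ||u - v||^2)"""
    sq_dist = sum((ui - vi) ** 2 for ui, vi in zip(u, v))
    return math.exp(-gamma * sq_dist)

def sigmoid_kernel(u, v, gamma=0.1, coef0=0.0):
    """K(u, v) = tanh(gamma * u . v + coef0)"""
    return math.tanh(gamma * dot(u, v) + coef0)

u, v = (1.0, 2.0), (3.0, 0.0)
print(linear_kernel(u, v))      # 3.0
print(polynomial_kernel(u, v))  # 16.0
print(rbf_kernel(u, u))         # 1.0, identical points have maximal similarity
print(rbf_kernel(u, v))
print(sigmoid_kernel(u, v))
```

Each kernel returns a single similarity score for a pair of points, which is all the dual formulation needs.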

Advantages of Support Vector Machine

- It is effective with high-dimensional data.

- It is memory efficient, because the decision function uses only a subset of the training points, known as support vectors.

- We can use different kernel functions for the decision function, and it is also possible to specify custom kernels.

Disadvantages of Support Vector Machine

- Support Vector Machines are not a good fit for very large datasets, because training time grows quickly with the number of samples.