Machine Learning

- Definition

- History

- Types

- Lifecycle

- Real-World Example

- Main challenges

When we think about machine learning, we may picture scenarios like the one in The Terminator, in which machines start to think on their own like humans and wage war on humanity. Scenarios like this are still far from reality: so far, machines can perform only specific, narrowly defined tasks with precision, even when they have been trained on similar data or situations for a long time or on large amounts of data. So the common idea that machine learning means machines can think or learn entirely by themselves is not fully true.

Machine learning is a subfield of artificial intelligence (AI): AI is the superset, and machine learning is one part, or subset, of it. The two are often discussed together, and the terms are frequently used interchangeably. Machine learning allows systems or computers to learn automatically from already available data, using various algorithms and mathematical techniques. In this post, we will look at what machine learning is and what its main types are; in other words, this is a machine learning basics guide for beginners.

First, we need to know what machine learning is.

Machine learning is an area of study in computer science that gives computers the ability to learn from data instead of relying solely on rules programmed in advance for each task. In this sense, it tries to make computers behave more like humans, who learn from experience. Machine learning is used very extensively in today's world, and the term has been in use since around 1959, when Arthur Samuel first used it.

Machine learning is the science (and, we could also say, the art) of programming computers so that they can learn from data. A more general definition is that machine learning is the "field of study that gives computers the ability to learn without being explicitly programmed" (Arthur Samuel, 1959).

For a more engineering-oriented definition, we can say: "A computer program is said to learn from experience E with respect to some task T and some performance measure P, if its performance on T, as measured by P, improves with experience E" (Tom Mitchell, 1997).
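To make T, E, and P concrete, here is a toy sketch in which all data is hypothetical. The task T is labeling a number 1 if it is at least 5 and 0 otherwise, experience E is a growing list of labeled examples, and P is accuracy on a fixed test set. The learner (a threshold placed halfway between the largest example labeled 0 and the smallest labeled 1) is an assumption chosen purely for illustration.

```python
def learn_threshold(examples):
    """Learn a decision threshold from (x, label) pairs: halfway between the
    largest x labeled 0 and the smallest x labeled 1 (needs both labels)."""
    max_neg = max(x for x, y in examples if y == 0)
    min_pos = min(x for x, y in examples if y == 1)
    return (max_neg + min_pos) / 2

def accuracy(threshold, test_set):
    """Performance measure P: fraction of test points classified correctly."""
    correct = sum((x >= threshold) == (y == 1) for x, y in test_set)
    return correct / len(test_set)

# Task T: label x as 1 if x >= 5, else 0 (the rule is hidden from the learner).
test_set = [(i * 0.5, int(i * 0.5 >= 5)) for i in range(20)]
# Experience E: labeled examples arriving over time (hypothetical).
experience = [(1, 0), (7, 1), (3, 0), (6, 1), (4.5, 0), (5, 1)]

for n in (2, 4, 6):
    t = learn_threshold(experience[:n])
    print(n, t, accuracy(t, test_set))
# 2 4.0 0.9
# 4 4.5 0.95
# 6 4.75 1.0
```

As E grows from 2 to 6 examples, P on the same task T rises from 0.9 to 1.0, which is exactly what Mitchell's definition calls learning.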

Machine Learning Examples

Let's look at a real-world example to better understand machine learning. In a hospital in a large city, a nurse named Sarah found herself grappling with a common healthcare challenge: patient readmissions. Despite the hospital's best efforts to provide quality care, some patients returned shortly after discharge, mainly because of complications that could have been prevented with timely interventions.

One day, Sarah attended a workshop on machine learning applications in healthcare. Excited by the potential of this technology, she set out to explore how it could help reduce readmissions at her hospital.

Sarah arrived at the hospital's data science department with a can-do attitude, determined to use machine learning algorithms to crunch reams of patient data. Together, Sarah and the data science team examined factors such as patients' medical histories, treatment plans, demographics, and post-discharge follow-up procedures.

As they dug deeper into the data, patterns started to emerge. The machine learning algorithms identified several key predictors of readmission, including the patient's age, previous hospitalizations, chronic conditions, and adherence to medication regimens. Moreover, they found nuanced relationships between these variables, allowing for a more comprehensive understanding of readmission risk factors.

With these insights, Sarah and the data science team devised a proactive strategy to prevent readmissions. They implemented personalized care plans tailored to each patient's needs, using machine learning models to predict and mitigate potential risks. For instance, high-risk patients received additional post-discharge support, such as home visits from healthcare professionals, remote monitoring devices, and regular check-in calls.

How does machine learning work?

Machine learning models mainly try to predict results. To make predictions, a model first trains on historical data and learns from it, and then it predicts outcomes for new, unseen data. Generally, the more data a model has, the better it predicts, that is, the higher its accuracy. Hard coding a solution instead brings many challenges: the task can be very complex, and whenever the logic needs to change, we have to go through all the written code to update it, which is costly and time-consuming. With machine learning, instead of writing the rules by hand, we provide data to a learning algorithm, which automatically builds the logic from the data and can then predict the output. This also makes updates easier: when new data becomes available, we can simply retrain the model on it.

Why should we use machine learning?

We should use machine learning because it reduces the time and cost of tasks that previously took many lines of code and a lot of effort. For example, email filters can now separate ham (legitimate email) from spam without our guidance, which is possible because of machine learning. If we tried to do this with a traditional hand-coded approach, it would take a lot of time and many hard-coded rules, and even then, whenever a new type of spam arrived, we would need to update the code manually, which is very difficult. With machine learning, this is much easier: to handle a new type of spam, we just need to retrain our model on new data that includes examples of it. This is only one example of why we should use machine learning; there are countless applications where machine learning is used today, and many more where it may be used in the future. Below are some key points that show the importance of machine learning:

- The rapid increase in the amount of data being produced.

- Solving complex problems that are difficult for humans, such as finding patterns in a dataset with 1,000 features.

- Supporting decisions in many sectors, such as finance, and in tasks such as fraud and anomaly detection.

- Finding hidden patterns and extracting useful information from data.
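To make the spam-filter example concrete, here is a minimal multinomial naive Bayes classifier, one classic technique for spam filtering, trained on a tiny set of hypothetical emails. It is a sketch, not a production filter, and it illustrates the point made about new types of spam: when one appears, we add labeled examples and retrain instead of rewriting rules.

```python
import math
from collections import Counter

def train(emails):
    """Count word occurrences per class from (text, label) pairs."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    doc_counts = Counter()
    for text, label in emails:
        word_counts[label].update(text.lower().split())
        doc_counts[label] += 1
    return word_counts, doc_counts

def classify(model, text):
    """Multinomial naive Bayes with add-one (Laplace) smoothing.
    Assumes the training data contained at least one email of each class."""
    word_counts, doc_counts = model
    vocab_size = len(set(word_counts["spam"]) | set(word_counts["ham"]))
    total_docs = sum(doc_counts.values())
    scores = {}
    for label in ("spam", "ham"):
        total_words = sum(word_counts[label].values())
        score = math.log(doc_counts[label] / total_docs)  # log prior
        for word in text.lower().split():
            # Log likelihood of each word; +1 so unseen words don't zero it out.
            score += math.log((word_counts[label][word] + 1) / (total_words + vocab_size))
        scores[label] = score
    return max(scores, key=scores.get)

# Hypothetical training emails
emails = [
    ("win a free prize now", "spam"),
    ("claim your free money", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch with the team on friday", "ham"),
]
model = train(emails)
print(classify(model, "free prize inside"))            # spam
print(classify(model, "crypto investment on friday"))  # ham: a new kind of spam slips through

# A new type of spam appears: add labeled examples and retrain.
emails += [
    ("crypto investment guaranteed returns", "spam"),
    ("double your crypto investment", "spam"),
]
model = train(emails)
print(classify(model, "crypto investment on friday"))  # spam
```

No rule was edited between the two runs; only the training data changed.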

Examples of machine learning applications

- Examining product images on a production line to automatically classify them and identify defective products. This is an image classification task, and spotting defective products can also be framed as anomaly detection.

- Detecting tumors in brain scans. This is an example of semantic segmentation, in which every pixel of an image (here, a medical image) is classified.

- Automatically classifying news articles. This is a natural language processing (NLP) task, more specifically text classification, which can be tackled with recurrent neural networks or transformers.

- Automatically detecting offensive comments in forums. This is also text classification, typically done with NLP tools: comments are analyzed to determine whether they contain offensive language or inappropriate content.

- Automatically summarizing long documents. This NLP task, called text summarization, compresses long texts into shorter, more concise versions, extracting the important information while preserving the main points.

- Building a personal assistant or chatbot. This involves several NLP components, such as natural language understanding (NLU) and question-answering modules.

- Forecasting a company's revenue for next year based on various performance metrics. This is a regression task, a form of predictive analytics, which can be tackled with regression models such as linear or polynomial regression trained on historical data.

- Making an application react to voice commands. This requires speech recognition: audio samples are analyzed to interpret spoken commands. Because audio sequences are long and complex, speech recognition is mostly based on deep learning models such as RNNs, CNNs, or transformers.

- Detecting credit card fraud. This is an example of anomaly detection, in which we try to spot unusual patterns in a person's credit card transactions.
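As a minimal illustration of the anomaly-detection idea behind fraud detection, this sketch learns what "normal" looks like from one customer's past transaction amounts and flags new amounts that lie more than three standard deviations from the mean (a simple z-score rule). The amounts and the threshold are hypothetical assumptions; real fraud systems use many more features and far more sophisticated models.

```python
from statistics import mean, stdev

def flag_unusual(history, new_amounts, threshold=3.0):
    """Flag amounts more than `threshold` standard deviations away from the
    mean of the customer's past transactions (a simple z-score rule)."""
    mu = mean(history)
    sigma = stdev(history)
    return [abs(x - mu) / sigma > threshold for x in new_amounts]

# Hypothetical past transaction amounts for one customer
history = [12.5, 40.0, 23.0, 18.75, 35.0, 27.5, 15.0, 30.0]
print(flag_unusual(history, [22.0, 950.0]))  # [False, True]
```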

Features of Machine Learning

- It can learn from past data and improve its performance accordingly.

- It can detect many different patterns in data.

- It is a data-driven technology.

- It is similar to data mining, since both deal with huge amounts of data.

Types of Machine Learning

There are many different ways to classify machine learning systems, but at a broad level, we can divide them into four types:

- Supervised learning

- Unsupervised learning

- Reinforcement learning

- Semi-supervised learning

Supervised learning

In supervised learning, the training set we feed to the algorithm includes the desired solutions, which are called labels. In other words, labeled datasets are given to the machine learning system for training, and after training, the system can predict results or outcomes for new data.

| Gender | Age | Weight | Label |
|--------|-----|--------|---------|
| M | 47 | 68 | Sick |
| M | 68 | 70 | Sick |
| F | 58 | 56 | Healthy |
| M | 49 | 67 | Sick |
| F | 32 | 60 | Healthy |
| M | 34 | 65 | Healthy |
| M | 21 | 74 | Healthy |

The table above contains patient information along with a label column, called "Label", which takes one of two values: Sick or Healthy.
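As a sketch of how a supervised model can use labeled rows like these, here is k-nearest neighbors (k-NN) applied to the table. Using only Age and Weight, choosing k = 3, and skipping feature scaling are assumptions made to keep the example small; with only seven rows, the predictions are purely illustrative and carry no medical meaning.

```python
import math
from collections import Counter

# Rows from the labeled table above: (gender, age, weight, label)
patients = [
    ("M", 47, 68, "Sick"),
    ("M", 68, 70, "Sick"),
    ("F", 58, 56, "Healthy"),
    ("M", 49, 67, "Sick"),
    ("F", 32, 60, "Healthy"),
    ("M", 34, 65, "Healthy"),
    ("M", 21, 74, "Healthy"),
]

def knn_predict(age, weight, k=3):
    """Predict a label by majority vote among the k patients closest to
    (age, weight) in Euclidean distance. Gender is ignored here."""
    nearest = sorted(patients, key=lambda p: math.dist((age, weight), (p[1], p[2])))[:k]
    votes = Counter(p[3] for p in nearest)
    return votes.most_common(1)[0][0]

print(knn_predict(50, 69))  # Sick
print(knn_predict(30, 63))  # Healthy
```

In practice, features are usually scaled first so that a column with a larger numeric range does not dominate the distance.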

At the most basic level, supervised learning can be divided into two subcategories: classification and regression. A good example of classification is separating spam from ham (non-spam) emails: we first train the model on a large number of example emails along with their labels, which enables it to learn how to classify new emails. Regression, the other common task, is predicting continuous values, such as the price of a car or a house, or a stock price.

Let's look at the most common supervised learning algorithms:

- K-Nearest Neighbors

- Linear Regression

- Logistic Regression

- Support Vector Machines

- Decision Trees and Random Forests

- Neural Networks and deep learning.
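For the regression side, a minimal sketch of simple linear regression fitted by ordinary least squares looks like this. The house sizes and prices are hypothetical, and a single feature is used only to keep the arithmetic visible.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

# Hypothetical data: house size (square metres) and price (thousands)
sizes = [50, 80, 100, 120]
prices = [112, 168, 211, 249]
slope, intercept = fit_line(sizes, prices)
print(round(slope * 90 + intercept, 1))  # predicted price for 90 square metres: 189.9
```

The model "learns" just two numbers, a slope and an intercept, and then uses them to predict a continuous value for a size it has never seen.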

Unsupervised Learning

In unsupervised learning, we have a dataset but no labels; that is, the data is unlabeled, and no categories are given to the algorithm in advance. The machine tries to learn on its own, without supervision, by finding useful insights or patterns in the data. Its main goal is to group unorganized information according to similarities, patterns, and differences, without any prior training on labeled data. Clustering is a good example of unsupervised learning, and many recommendation systems that suggest movies, songs, or next purchases rely in part on unsupervised techniques.

| Gender | Age | Weight |
|--------|-----|--------|
| M | 47 | 68 |
| M | 68 | 70 |
| F | 58 | 56 |
| M | 49 | 67 |
| F | 32 | 60 |
| M | 34 | 65 |
| M | 21 | 74 |

The table above contains the same patient data, but without any labels, so it cannot be used for supervised learning. Instead, we can try to find patterns or clusters in it; for example, we could group the patients by gender or sort them into age groups. Here are the most common unsupervised learning tasks and some of their algorithms:

- Clustering
  - K-Means
  - DBSCAN
  - Hierarchical Cluster Analysis (HCA)

- Anomaly detection and novelty detection
  - Isolation Forest

- Visualization and dimensionality reduction
  - Principal Component Analysis (PCA)
  - Kernel PCA
  - Locally Linear Embedding (LLE)
  - t-Distributed Stochastic Neighbor Embedding (t-SNE)

- Association rule learning
  - Apriori
  - Eclat
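As a sketch of clustering, here is plain k-means (Lloyd's algorithm) applied to the Age column of the unlabeled table, grouping patients into two age groups without any labels. Using only age, choosing k = 2, and initializing with the first k points (libraries typically use random or k-means++ initialization) are assumptions made to keep the example small and deterministic.

```python
import math

def kmeans(points, k, max_iter=100):
    """Plain k-means on tuples of numbers. Initial centroids are simply the
    first k points, which keeps this demo deterministic."""
    centroids = [tuple(p) for p in points[:k]]
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid).
        new_centroids = [
            tuple(sum(dim) / len(c) for dim in zip(*c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
        if new_centroids == centroids:  # centroids stopped moving: converged
            break
        centroids = new_centroids
    return centroids, clusters

ages = [47, 68, 58, 49, 32, 34, 21]  # Age column of the unlabeled table
centroids, clusters = kmeans([(a,) for a in ages], k=2)
print(centroids)                                      # centroids at ages 36.6 and 63.0
print([sorted(p[0] for p in c) for c in clusters])    # [[21, 32, 34, 47, 49], [58, 68]]
```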

Reinforcement Learning

Reinforcement learning is very different from both supervised and unsupervised learning. In this setting, an agent (or bot) observes its environment, selects and performs an action, and receives either a reward or a penalty (a negative reward) in return. The agent must learn by itself the best strategy, called a policy, to maximize its reward over time. Think of how we train an animal: we reward it for doing the right thing or following our commands, and withhold the reward when it doesn't. Reinforcement learning works the same way: when the agent behaves well, it is rewarded; when it behaves poorly, it is penalized. One excellent example is AlphaGo, developed by DeepMind, a Go-playing program that made headlines in 2017 when it defeated the world champion. It learned its winning policy by analyzing millions of games and then playing many games against itself.

Reinforcement learning differs from supervised learning in that supervised learning is trained on labels, or answers, that are already available in the training data, whereas in reinforcement learning the model is not given any answers. Instead, the agent decides how to carry out the assigned task using a trial-and-error approach. Reinforcement learning algorithms learn from the results of their actions and work out the best course of action: every time the agent takes an action, it receives feedback from the environment, in the form of a reward, that tells it whether the decision was good or bad.
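Q-learning is one classic reinforcement learning algorithm (AlphaGo itself used a different and far more complex approach). This toy sketch, built around a hypothetical five-cell corridor environment, shows the loop described above: the agent acts, receives a reward, and updates its estimate of how good each action is, while epsilon-greedy exploration supplies the trial and error. All parameter values are illustrative assumptions.

```python
import random

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)    # move left, move right

def step(state, action):
    """Hypothetical environment: walls at both ends; reaching the goal
    gives reward +1 and ends the episode, every other move gives 0."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done

def q_learning(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}

    def greedy(s):
        # Best-known action, breaking ties at random.
        best = max(q[(s, a)] for a in ACTIONS)
        return rng.choice([a for a in ACTIONS if q[(s, a)] == best])

    for _ in range(episodes):
        state, done, steps = 0, False, 0
        while not done and steps < 100:
            # Trial and error: explore with probability epsilon, else exploit.
            action = rng.choice(ACTIONS) if rng.random() < epsilon else greedy(state)
            nxt, reward, done = step(state, action)
            # Move Q toward reward + discounted value of the best next action.
            target = reward if done else reward + gamma * max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state, steps = nxt, steps + 1
    return q

q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
print(policy)  # learned greedy action per non-goal cell: [1, 1, 1, 1]
```

No cell was ever labeled with the "right" move; the always-move-right policy emerges only from the rewards.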

Semi-Supervised Learning

Semi-supervised learning is a type of machine learning that falls between supervised and unsupervised learning. It offers a solution for situations where there is little labeled data compared with unlabeled data: the approach combines a small set of labeled data with a larger set of unlabeled data during training. A notable example is Google Photos, where the system automatically detects faces in uploaded images and groups photos of the same person, which is a form of unsupervised learning. It then asks users to tag these people, adding a supervised element that improves accuracy and the user experience. This combination makes it easier to organize photos and to search for them by the people they contain.

Most semi-supervised learning algorithms combine aspects of unsupervised and supervised learning. For example, deep belief networks (DBNs) are composed of layers of unsupervised components called restricted Boltzmann machines (RBMs). The RBMs are trained sequentially in an unsupervised manner, and then the whole network is fine-tuned using supervised learning techniques.
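To make the idea of combining a few labels with many unlabeled points concrete, here is a deliberately simple sketch of self-training (pseudo-labeling): starting from two labeled 1-D points, the unlabeled point closest to any labeled point repeatedly receives that neighbor's label. The data and the greedy nearest-neighbor rule are assumptions chosen for illustration; this is not how Google Photos or DBNs work.

```python
def propagate_labels(labeled, unlabeled):
    """Greedy nearest-neighbour self-training on 1-D points.

    labeled: dict mapping point -> label (the small labeled set)
    unlabeled: list of points without labels
    Repeatedly pseudo-labels the unlabeled point that is closest to any
    labeled point, copying that neighbour's label.
    """
    labeled = dict(labeled)      # don't mutate the caller's dict
    remaining = list(unlabeled)

    def nearest(u):
        # (distance, label) of the labeled point closest to u
        x = min(labeled, key=lambda p: abs(p - u))
        return abs(x - u), labeled[x]

    while remaining:
        u = min(remaining, key=lambda v: nearest(v)[0])
        labeled[u] = nearest(u)[1]
        remaining.remove(u)
    return labeled

result = propagate_labels({1.0: "A", 9.0: "B"}, [2.0, 3.0, 4.0, 6.0, 7.0, 8.0])
print(sorted(result.items()))
# [(1.0, 'A'), (2.0, 'A'), (3.0, 'A'), (4.0, 'A'), (6.0, 'B'), (7.0, 'B'), (8.0, 'B'), (9.0, 'B')]
```

Two labels end up labeling eight points, because the unlabeled data reveals where the two groups lie.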

There are also other ways to categorize machine learning systems, such as instance-based versus model-based learning and batch versus online learning.

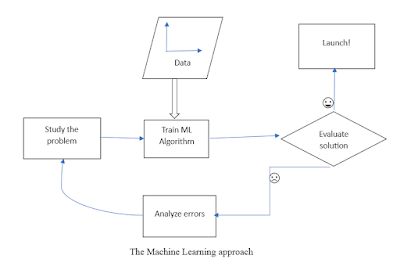

Machine Learning Lifecycle

The machine learning lifecycle involves the following steps:

Understanding the problem – This is the first step of the machine learning process. We first try to understand the business problem and define its objective, that is, what the model must do.

Data Collection – Once the problem statement is established, the next step is gathering the relevant data needed to build the model. This data can be obtained from a variety of sources, including databases, sensors, application programming interfaces (APIs), and web scraping.

Data Preparation – Collecting data alone is not enough to make a machine learning model work properly. Once the data is collected, we need to check that it is correct and convert it into the format the model requires, so that the model can find the hidden patterns in it. This process has several sub-steps:

- Data cleaning

- Data transformation

- Exploratory data analysis and feature engineering

- Splitting the dataset into training and test sets
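A minimal sketch of three of these sub-steps on numeric rows follows; exploratory analysis and feature engineering are omitted. The split is deliberately done before scaling so the min/max values are learned from the training set only and no test-set information leaks into training. The incomplete record, the 30% test ratio, and the seed are hypothetical choices.

```python
import random

def prepare(rows, test_ratio=0.3, seed=42):
    """Minimal data-preparation sketch on numeric rows (tuples)."""
    # 1. Cleaning: drop rows that contain a missing value (None).
    clean = [row for row in rows if None not in row]
    # 2. Splitting: shuffle reproducibly, then hold out a test set.
    random.Random(seed).shuffle(clean)
    n_test = int(len(clean) * test_ratio)
    test, train = clean[:n_test], clean[n_test:]
    # 3. Transformation: min-max scaling, with min/max learned from the
    #    training set only so no test information leaks into training.
    lows = [min(col) for col in zip(*train)]
    highs = [max(col) for col in zip(*train)]

    def scale(row):
        return tuple((v - lo) / (hi - lo) if hi > lo else 0.0
                     for v, lo, hi in zip(row, lows, highs))

    return [scale(r) for r in train], [scale(r) for r in test]

# (age, weight) rows from the earlier patient table, plus a hypothetical
# incomplete record to show cleaning.
rows = [(47, 68), (68, 70), (58, 56), (49, 67), (None, 60),
        (32, 60), (34, 65), (21, 74)]
train, test = prepare(rows)
print(len(train), len(test))  # 5 2
```

Test rows may fall slightly outside [0, 1] after scaling; that is expected and is the price of not peeking at the test set.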

Model Selection – After preprocessing the data, the next step is selecting the most suitable machine learning algorithm for the problem. This decision requires knowing the strengths and weaknesses of various algorithms. Often, we need to try several models, evaluate their results, and then choose the algorithm that best fits the particular needs of our task.

Model building and training – Once we have selected a suitable algorithm, we need to build and train the model. This typically involves the following:

- In traditional machine learning, building the model mostly means choosing and fine-tuning a few hyperparameters.

- In deep learning, the first step is designing the architecture of each layer. This includes defining details such as the input and output dimensions, the number of nodes in each layer, the loss function, the optimizer (such as a variant of gradient descent), and other parameters needed to build the neural network.

- Finally, the model is trained on the preprocessed dataset.

Model Evaluation – Once training is complete, we assess the model's effectiveness by evaluating its performance on the test dataset. Depending on the task, this can involve generating a classification report; calculating metrics such as precision, recall, and F1 score; examining the ROC curve; or, for regression, computing the mean squared error and mean absolute error.
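The metrics named here are simple to compute by hand. This sketch implements precision, recall, and F1 for one positive class, and mean squared error and mean absolute error for regression; the label and prediction lists are hypothetical.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1

def regression_errors(y_true, y_pred):
    """Mean squared error and mean absolute error."""
    errors = [p - t for t, p in zip(y_true, y_pred)]
    mse = sum(e * e for e in errors) / len(errors)
    mae = sum(abs(e) for e in errors) / len(errors)
    return mse, mae

print(classification_metrics([1, 0, 1, 1, 0, 1, 0, 0],
                             [1, 1, 0, 1, 0, 1, 1, 0]))
# precision 0.6, recall 0.75, F1 about 0.667
print(regression_errors([3.0, 5.0, 2.5], [2.5, 5.0, 4.0]))
# MSE about 0.833, MAE about 0.667
```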

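Model tuning – After training and testing, we have the model's results on unseen data, and we may need to tune the algorithm's hyperparameters to improve its performance. Ideally, this tuning is done on a separate validation set (or with cross-validation) rather than on the test set, so that the final test score remains an unbiased estimate of performance on new data.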
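A common way to tune a hyperparameter is a grid search: try each candidate value and keep the one that scores best on held-out validation data. This sketch tunes k for a 1-D k-NN classifier; the data, including one deliberately noisy training point at x = 2.5, and the candidate values are hypothetical.

```python
from collections import Counter

def knn_predict(train, x, k):
    """Majority label among the k training points nearest to x (1-D)."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def accuracy(train, data, k):
    return sum(knn_predict(train, x, k) == y for x, y in data) / len(data)

# Hypothetical toy data: label 0 for small x, 1 for large x,
# with one deliberately noisy training point at x = 2.5.
train = [(1.0, 0), (2.0, 0), (2.5, 1), (3.0, 0), (4.0, 0),
         (6.0, 1), (7.0, 1), (8.0, 1), (9.0, 1)]
validation = [(2.4, 0), (2.6, 0), (5.4, 1), (3.2, 0)]

# Grid search: score each candidate k on the validation set.
scores = {k: accuracy(train, validation, k) for k in (1, 3, 5)}
best_k = max(scores, key=scores.get)  # first k with the highest score
print(scores, best_k)  # {1: 0.5, 3: 1.0, 5: 1.0} 3
```

With k = 1 the model copies the noisy point and scores poorly, a small example of overfitting; larger k smooths it out.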

Deployment – Once the model is optimized and its performance meets our expectations, we deploy it to a production environment, where it makes predictions on fresh, never-before-seen data. In this phase, the model is either integrated into the existing software infrastructure or a new system is built specifically to serve it.

Monitoring and Maintenance – After deployment, it is critical to track the model's performance over time, keep an eye on the data in the production environment, and carry out any necessary maintenance. This includes updating the model when new data becomes available, retraining it as needed, and monitoring for data drift.
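One simple way to watch for data drift is to compare a feature's values in production with its values in the training data. This sketch uses a deliberately crude statistic (how many training standard deviations the mean has shifted) and an arbitrary alert threshold of 1.0; both are assumptions, and real monitoring often uses statistical tests such as the Kolmogorov-Smirnov test. The production ages are hypothetical.

```python
from statistics import mean, stdev

def drift_score(train_values, live_values):
    """How many training standard deviations the live mean has shifted."""
    return abs(mean(live_values) - mean(train_values)) / stdev(train_values)

train_ages = [47, 68, 58, 49, 32, 34, 21]  # Age column of the training table
live_ages = [72, 80, 77, 69, 85]           # hypothetical production inputs

score = drift_score(train_ages, live_ages)
THRESHOLD = 1.0  # arbitrary alert threshold (assumption)
print(f"drift score {score:.2f}:", "retrain?" if score > THRESHOLD else "ok")
```

Here the production patients are much older than the training patients (a score of about 2.0), a signal that the model may need retraining on fresher data.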

Main Challenges of Machine Learning

In the realm of machine learning, two critical factors often lead to suboptimal outcomes: flawed algorithms and inadequate data. Let's delve into each aspect separately.

Insufficient Training Data:

To train a machine learning model effectively, a substantial amount of data is essential. Even simple tasks, such as distinguishing between an apple and a ball, may require thousands of examples, while more complex tasks like speech recognition might demand millions. Unfortunately, many fields suffer from limited or unexplored data, which hinders the development of robust machine learning models.

Nonrepresentative Training Data:

The quality of training data is paramount for successful machine learning. The data provided for training must accurately represent the scenarios the model will encounter in real-world applications. Otherwise, the model may struggle to generalize, leading to poor predictions or outputs.

Poor-Quality Data:

A model cannot identify important patterns if it is trained on data that is full of mistakes, outliers, or noise. To improve model performance, time and effort must thus be spent cleaning and improving training data.

Irrelevant Features:

As the saying goes, "garbage in, garbage out": a model can only learn well if it is fed relevant features. Feature engineering, that is, selecting, extracting, and creating features that let the model capture the underlying patterns in the data, is therefore critical.

Overfitting:

When a model too closely fits the training data—including noise and outliers—it is said to be overfit. It may do well in training, but in real-world situations, it performs worse since it finds it difficult to generalize to unknown input.

Underfitting:

It occurs when a model fails to capture important patterns in the training data, which leads to poor performance both on the training data and on new data.

Additional Limitations:

Machine learning thrives on data diversity and heterogeneity: without a sufficient range of variation in the data, algorithms struggle to derive meaningful insights. As a rough guideline, a sufficient sample size for model training is usually considered to be at least 20 observations per group. Moreover, machine learning is only as useful as the training data available; without it, the model cannot learn anything. Machine learning projects are often hampered by a shortage of data or a lack of variety in it.

In conclusion, successful implementation of machine learning in many fields depends critically on resolving problems with algorithmic defects and data constraints.
