Python Pandas

Introduction

- Pandas provides the DataFrame (like a spreadsheet) and the Series (like a column) for data analysis, and it integrates with other libraries.

- Core Concepts of Pandas

- Advantages of Pandas

- Disadvantages of Pandas

- Best Practices for Using Pandas

You need a basic understanding of NumPy and pandas to work with structured data in Python, so this chapter explains pandas. Pandas provides data structures and data manipulation tools that make data cleaning and analysis in Python fast and easy. It is often used alongside NumPy, SciPy, statsmodels, scikit-learn, and matplotlib. Pandas adopts NumPy's style of array-based computing, favoring array-based functions and data processing without for loops.

Pandas shares many capabilities with NumPy, but it is designed for working with tabular or heterogeneous data, whereas NumPy is best suited for homogeneous numerical array data. NumPy and pandas are therefore basic tools for Python programming with data, and data wrangling in Python commonly involves pandas, NumPy, and IPython.

Pandas became open source in 2010 and has matured considerably since then. It is a large library with many real-world use cases. In this series of blogs, we learn about NumPy and pandas. We usually import pandas using the following code:

import pandas as pd

In code, the pandas library is then referred to as pd. Because Series and DataFrame are used so often, it can be convenient to import them into the local namespace as well:

from pandas import Series, DataFrame

Real-world Example for Pandas

To better understand how pandas works, let's look at a real-world example. Suppose we work as a data analyst at a retail company, and our task is to identify recent sales trends and make decisions that benefit the company. To do this, we use Python's pandas library because it handles large amounts of data easily.

First, we import the pandas library and load the sales data into a DataFrame, pandas' primary data structure. In the DataFrame, each row represents a sale, and each column contains information such as the product sold, the sales amount, the customer's details, and the date of the transaction.

With pandas, we can easily clean and preprocess the data. We handle missing values, remove duplicates, and convert data types as needed. For example, we might convert the date column to a datetime object to facilitate time-based analysis.

Next, we perform various analysis operations on the data using pandas' powerful functionality. We calculate key metrics like total sales revenue, average order value, and customer retention rate. Pandas' groupby function allows us to aggregate data by different categories, like product categories or customer segments, to gain insights into sales performance.

If we want, we can also use pandas for time series analysis, examining sales trends over different periods. We can compute rolling averages, identify seasonal patterns, and detect anomalies in sales data.

Furthermore, pandas enables us to merge multiple datasets and perform join operations to enrich the sales data with additional information, such as product descriptions or customer demographics, stored in separate datasets.

We can also visualize our findings using libraries like Matplotlib or Seaborn, leveraging pandas' compatibility with these visualization tools to create insightful charts and graphs for presentation.

This real-world example shows a situation where pandas solves our problem, although other Python libraries are usually needed as well for real-world problems. In both data science and machine learning, NumPy and pandas go hand in hand.
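The workflow above can be sketched in a few lines of pandas. This is a minimal illustration and not a production pipeline. The table, its column names (order_id, product, amount, date), and the product-category lookup are hypothetical. A real analysis would load the data from files or a database.

```python
import pandas as pd

# Hypothetical sales records; in practice these might come from pd.read_csv().
sales = pd.DataFrame({
    "order_id": [1, 2, 2, 3, 4, 5],
    "product": ["pen", "book", "book", "pen", "lamp", None],
    "amount": [2.5, 12.0, 12.0, 3.0, 25.0, 7.5],
    "date": ["2024-01-03", "2024-01-05", "2024-01-05",
             "2024-02-10", "2024-02-11", "2024-02-20"],
})

# Cleaning: drop the duplicated row, fill the missing product, parse dates.
sales = sales.drop_duplicates().assign(
    product=lambda d: d["product"].fillna("unknown"),
    date=lambda d: pd.to_datetime(d["date"]),
)

# Key metrics.
total_revenue = sales["amount"].sum()
average_order_value = sales.groupby("order_id")["amount"].sum().mean()

# Group-by aggregation: revenue per product.
revenue_by_product = sales.groupby("product")["amount"].sum()

# Time series: monthly revenue and a rolling average over 2 orders.
by_date = sales.set_index("date").sort_index()["amount"]
monthly_revenue = by_date.resample("MS").sum()
rolling_avg = by_date.rolling(window=2).mean()

# Enrichment: merge in a second, hypothetical table of product categories.
products = pd.DataFrame({
    "product": ["pen", "book", "lamp"],
    "category": ["office", "media", "home"],
})
enriched = sales.merge(products, on="product", how="left")

print(total_revenue, average_order_value)
print(revenue_by_product)
print(monthly_revenue)
```

The left merge keeps every sale even when no category matches. Here the "unknown" product gets a missing category.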

Introduction to Pandas Data Structure

To use pandas, we need to know its two main data structures: Series and DataFrame. They do not solve every problem, but they are powerful, valuable, and easy to use for most purposes.

Series

A Series is a one-dimensional labeled array that can hold integers, strings, floating-point values, Python objects, and so on. The axis labels are collectively called the index. The simplest Series is formed from just an array of data.

Below is the series syntax and an example:

s = pd.Series(data, index=index)

Data can be a Python dict, ndarray, or scalar value (like 5).

Consider an example:

The interactive string representation of a Series shows the index on the left and the values on the right. Since we did not specify an index, a default index of the integers 0 through N - 1 (where N is the length of the data) is created. You can get the array representation and the index object of the Series from its values and index attributes.
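Here is a small illustration with arbitrary values. A Series created from a plain list gets the default integer index, and its values and index attributes expose the underlying array and the index object:

```python
import pandas as pd

obj = pd.Series([4, 7, -5, 3])
print(obj)          # index 0..3 on the left, values on the right
print(obj.values)   # the underlying NumPy array
print(obj.index)    # a RangeIndex covering 0 to 3
```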

It is common to create a Series with an index that labels each data point. If the data is an ndarray, the index must have the same length as the data.
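The following sketch creates a Series with an explicit index, using illustrative labels and values. It also shows that pandas rejects an ndarray whose length differs from the index:

```python
import numpy as np
import pandas as pd

# One label per data point; these labels and values are illustrative.
obj2 = pd.Series([4, 7, -5, 3], index=["d", "b", "a", "c"])
print(obj2)

# The index must have the same length as an ndarray passed as data.
try:
    pd.Series(np.array([1, 2, 3]), index=["x", "y"])
except ValueError as err:
    print("ValueError:", err)
```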

Labels in the index can be used to select a single value or a set of values from a Series.

We can also update or reassign a value by its index label, simply by using the assignment operator.

When selecting values, a list such as ['a', 'c', 'd'] is interpreted as a list of index labels, even though it contains letters instead of numbers.
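With illustrative data, selecting by label, assigning by label, and selecting with the list ['a', 'c', 'd'] might look like this:

```python
import pandas as pd

obj2 = pd.Series([4, 7, -5, 3], index=["d", "b", "a", "c"])

print(obj2["a"])               # a single value selected by label
obj2["d"] = 6                  # update an existing label with =
print(obj2[["a", "c", "d"]])   # a list of labels selects several values
```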

From Dict

When a Series is created from a dictionary, the dictionary's values become the Series data and its keys become the index labels.
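For example, using a small dictionary with made-up numbers:

```python
import pandas as pd

# Keys become index labels (in insertion order); values become the data.
sdata = {"Ohio": 35000, "Texas": 71000, "Oregon": 16000}
obj3 = pd.Series(sdata)
print(obj3)
```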

If we also pass an index when creating a Series from a dictionary, pandas extracts only the values whose keys match the labels in the index and places them in the index's order.

Consider a dictionary with the keys 'A', 'B', 'C', and 'D', mapped to numbers between 10 and 40, and a custom_index with the labels 'B', 'C', 'E', and 'A'.

When producing the Series with pd.Series(data, index=custom_index), pandas compares the custom index labels with the dictionary keys. If a label matches a dictionary key, the Series receives the corresponding value. If the dictionary has no key for a label, pandas inserts a missing value for that label, usually NaN ("Not a Number").

Here, the values 20, 30, and 10 for the labels 'B', 'C', and 'A' in the custom index come from the dictionary. Pandas adds a missing value (NaN) for the custom index label 'E' because the dictionary has no matching key.

This behavior lets us retrieve dictionary values in whatever label order we want, which helps when reordering data or synchronizing data from several sources.
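The example described above can be reproduced as follows. The text gives the values for 'A', 'B', and 'C'. The value 40 for 'D' is an assumption, and it has no effect here because 'D' is not in the custom index.

```python
import pandas as pd

# Values for A, B, C follow the text; D = 40 is assumed.
data = {"A": 10, "B": 20, "C": 30, "D": 40}
custom_index = ["B", "C", "E", "A"]

s = pd.Series(data, index=custom_index)
print(s)   # 'E' has no matching key, so it is NaN; 'D' is left out
```

Because of the NaN, the resulting values are stored as floats.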

From scalar value

When creating a Series from a scalar value, we must specify an index, which determines the Series labels. The scalar value is then repeated to match the length of the index.

The process is summarized here:

Establishing the Scalar Value: Start by defining the scalar value that the Series should repeat.

Defining the Index: Next, specify an index. Each label in this index identifies one Series element. A list of labels, a range of integers, or another list-like object can be used.

Repeating the Value: Given the index and the scalar value, pandas repeats the value as many times as needed to match the index's length, and each repetition receives the corresponding index label.

Creating the Series: Finally, pandas creates the Series from the index labels and the repeated scalar values.

For example, suppose the index is ['A', 'B', 'C', 'D'] and the scalar value is 5. When the Series is constructed with pd.Series(scalar_value, index=index), pandas repeats the value 5 four times to match the length of the index and associates each repetition with a distinct index label.

This approach is useful for creating a constant-valued Series or for aligning a constant value with a set of labels.
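Here is the same example in code, using the scalar 5 and the index ['A', 'B', 'C', 'D'] from the text:

```python
import pandas as pd

scalar_value = 5
index = ["A", "B", "C", "D"]

# The scalar is repeated once per index label.
s = pd.Series(scalar_value, index=index)
print(s)
```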

Series is ndarray-like

A pandas Series is often called "ndarray-like" because it closely resembles a NumPy ndarray, which makes it a good data structure for structured data in Python. A Series with a NumPy dtype stores its values in an ndarray, typically a single contiguous block of memory, for efficient processing and storage. Thanks to this shared design, a Series supports positional indexing, slicing, element-wise operations, and iteration.

Labeled indexing distinguishes a Series from a NumPy array. Unlike a NumPy array, a Series has an index label for each element, so data can be retrieved by label in a meaningful and intuitive way. This labeled indexing makes a Series easier to use and well suited to data sets that need meaningful labels.

Another key difference is that a Series automatically aligns data by index label during operations. In element-wise calculations and arithmetic operations, a Series matches elements by label rather than by position. This automatic alignment reduces errors from mismatched data and streamlines data management.

A Series also supports grouping, merging, and handling missing values. These features make the Series a powerful Python tool for collecting structured data and for more advanced data manipulation and analysis.

Despite these additional features, a Series keeps ndarray-style data storage and attributes such as shape, dtype, and size, and with the object dtype it can even hold mixed data types. Because it combines ndarray-like characteristics with these advanced capabilities, the Series is a versatile Python data structure for data processing and analysis.

To see this in practice, we can create a Series named series from a list data with the labeled index index. We can then access the data as a NumPy array (series.values) and check its shape (series.shape) and type (series.dtype), just as with an ndarray. Element-wise operations show that the Series behaves like a NumPy array. Accessing and slicing items by label (series['B'] and series['B':'D']) distinguishes it from a NumPy array. Finally, creating a second Series, series2, with a different index and adding it to series shows how a Series aligns data by index label during operations.
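Here is a sketch of that demonstration. The names series, series2, data, and index follow the description; the values are illustrative.

```python
import numpy as np
import pandas as pd

data = [10, 20, 30, 40]
index = ["A", "B", "C", "D"]
series = pd.Series(data, index=index)

# ndarray-like behavior
print(series.values)    # underlying NumPy array
print(series.shape)     # (4,)
print(series.dtype)     # an integer dtype
print(series * 2)       # element-wise operation
print(np.sqrt(series))  # NumPy functions accept a Series

# Label-based behavior that plain ndarrays do not have
print(series["B"])
print(series["B":"D"])  # label slices include the end label

# Alignment: series2 shares only some labels with series
series2 = pd.Series([1, 2, 3], index=["B", "C", "E"])
print(series + series2)  # A, D, and E become NaN
```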

A Series therefore combines ndarray-like features with label-aware data processing. A Series can be passed to most NumPy functions, which treat it like an ndarray. However, slicing a Series slices the index as well as the values.

The examples above use NumPy dtypes. However, pandas and third-party libraries extend NumPy's type system in a few places, in which case the dtype is an ExtensionDtype. Examples within pandas include the nullable integer dtype and categorical data.
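The following example shows two extension dtypes that ship with pandas: the nullable integer dtype ("Int64") and the categorical dtype. The data is made up.

```python
import pandas as pd

# Nullable integer dtype: the missing value becomes pd.NA and the dtype stays integer.
ints = pd.Series([1, None, 3], dtype="Int64")
cats = pd.Series(["a", "b", "a"], dtype="category")

print(ints.dtype, cats.dtype)
print(isinstance(ints.dtype, pd.api.extensions.ExtensionDtype))
print(isinstance(cats.dtype, pd.api.extensions.ExtensionDtype))
```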

Categorical data

The categorical data type in pandas corresponds to categorical variables in statistics, which take a limited, and usually fixed, number of possible values. Examples include blood type, gender, social class, country of origin, observation time, and ratings on a Likert scale. Such variables take discrete values that fall within predefined categories.

In contrast to statistical categorical variables, categorical data in pandas may have an order, as in "strongly agree" versus "agree" or "first observation" versus "second observation". However, numerical operations such as addition and division are not possible. The order expresses a hierarchy among the categorical values, which allows comparisons.

Every value in categorical data is either one of the categories or a missing value (np.nan). The order is defined by the order of the categories, not by the lexical order of the values. Internally, the data structure has two components: an array of categories and an integer array of codes, where each code points to the value's position in the categories array. This design streamlines manipulation of categorical data.
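To see the two internal components, we can inspect the categories and codes of a small categorical Series through the .cat accessor. The sample values are illustrative. Categories inferred from the data are sorted, and a missing value is stored with the code -1.

```python
import numpy as np
import pandas as pd

s = pd.Series(["b", "a", np.nan, "b"], dtype="category")

print(s.cat.categories)  # the distinct categories, sorted: 'a', 'b'
print(s.cat.codes)       # positions into categories; -1 marks the missing value
```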

The categorical data type is useful in the following cases:

A string variable that takes only a few distinct values. Converting such a variable to a categorical one saves memory while preserving its functionality and structure.

A variable whose lexical order differs from its logical order, such as "one", "two", and "three". Once the variable is converted to an ordered categorical, sorting and min/max operations follow the defined logical order of the categories instead of the lexical order of the values.

As a signal to other Python libraries that the column should be treated as a categorical variable, for example to choose suitable plot types or statistical methods.
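The ordering case works as follows. With plain strings, sorting is lexical. After conversion to an ordered categorical whose categories are listed in logical order, sort_values(), min(), and max() follow that order. The words are illustrative.

```python
import pandas as pd

words = pd.Series(["two", "one", "three", "one"])
print(words.sort_values().tolist())    # lexical: one, one, three, two

ordered = words.astype(
    pd.CategoricalDtype(categories=["one", "two", "three"], ordered=True)
)
print(ordered.sort_values().tolist())  # logical: one, one, two, three
print(ordered.min(), ordered.max())    # one three
```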

Passing dtype="category" when creating a Series sets its dtype to category.
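For example, with sample letters as data:

```python
import pandas as pd

s = pd.Series(["a", "b", "c", "a"], dtype="category")
print(s)
print(s.dtype)   # category
```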

Converting a Series or column to the category data type can help when the column represents categories or groups and has a limited number of unique values. Making such columns categorical can speed up operations such as value_counts() and groupby() and conserves memory. To change the data type of a Series or column, we can use astype("category").
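The sketch below matches the description that follows, with illustrative column contents. The memory comparison on a larger repetitive column is only indicative, since exact byte counts depend on the pandas version and platform.

```python
import pandas as pd

df = pd.DataFrame({"category": ["A", "B", "C", "A", "B", "A"]})
print(df.dtypes)   # 'category' starts out as a string (object) column

df["category"] = df["category"].astype("category")
print(df.dtypes)   # 'category' now has dtype category

# Memory comparison on a larger, repetitive column (exact numbers vary).
big = pd.Series(["A", "B", "C"] * 100_000)
print(big.memory_usage(deep=True), big.astype("category").memory_usage(deep=True))
```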

In the above code:

We initialize a DataFrame called 'df' with a column named 'category' that contains the strings 'A', 'B', and 'C'. We use the astype() method with the argument 'category' to convert this column to the category data type. Next, we print the data types of all DataFrame columns to confirm that the 'category' column now has the category data type.

The converted 'category' column is now 'category' type. This may improve performance and memory efficiency, especially in large datasets with repetitive category columns.

We can also convert a Series or column to the categorical data type by assigning it a pandas.Categorical object. This approach gives us more control over the categories and their order.
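Here is a sketch of this approach. The sample values and the category order low < medium < high are assumptions chosen for illustration.

```python
import pandas as pd

df = pd.DataFrame({"category": ["low", "high", "medium", "low"]})

# Explicit, ordered categories (an illustrative choice).
levels = ["low", "medium", "high"]
cat = pd.Categorical(df["category"], categories=levels, ordered=True)

# Wrap the Categorical in a Series and assign it back to the column.
df["category"] = pd.Series(cat, index=df.index)
print(df.dtypes)
print(df["category"].cat.categories.tolist())
```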

This code defines sample data and categories, then creates a categorical Series by passing the output of pd.Categorical to pd.Series. We assign this Series to the DataFrame's 'category' column. Finally, we check the DataFrame's data types to confirm that the 'category' column has the category dtype.


If we need the actual array backing a Series, we can use Series.array.

Accessing the array directly can be useful when we need to perform an operation without the index, for example to disable automatic alignment. Series.array is always an ExtensionArray. Briefly, an ExtensionArray is a thin wrapper around one or more concrete arrays, such as a numpy.ndarray. Pandas knows how to store an ExtensionArray in a Series or a DataFrame column.

A Series is ndarray-like, but if we need an actual ndarray, we can use Series.to_numpy().

Even if the Series is backed by an ExtensionArray, Series.to_numpy() will return a NumPy ndarray.
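The following example compares the two accessors on illustrative data. For a plain integer Series, .array is an ExtensionArray that wraps a NumPy array. For a categorical Series, it is a Categorical. In both cases, .to_numpy() returns an ndarray.

```python
import numpy as np
import pandas as pd

s = pd.Series([1, 2, 3], index=["a", "b", "c"])
print(s.array)       # an ExtensionArray wrapping the values (no index)
print(s.to_numpy())  # a genuine NumPy ndarray

cats = pd.Series(["x", "y", "x"], dtype="category")
print(type(cats.array).__name__)   # Categorical
print(cats.to_numpy())             # still a plain ndarray of the values
```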

Series is dict-like

A Series is also like a fixed-size dictionary because values can be accessed and modified by index label. The similarities are as follows:

Index Labels: Like a dictionary, a Series labels each entry, in this case with an index label that identifies the element. Unlike dictionary keys, however, pandas index labels do not have to be unique.

Accessing Values: Both dictionaries and Series let us access values by label. For a Series, we use square brackets ([]) with the index label.

Setting Values: Both allow values to be set by label. Just as we update a dictionary's key-value pair, we can assign a new value to a Series index label with the assignment operator (=).

Key-Value Relationship: Each index label is a key and the related element is the value in a Series.

Fixed-Size: The length of a Series is set when it is created, which is why the analogy is to a dictionary of fixed size. Existing values can be changed freely. Assigning to a label that does not yet exist still works, but pandas handles it by enlarging the Series.

Comparing a Series to a fixed-size dictionary shows how index labels make data easy to access and modify, making the Series a versatile data structure for Python data manipulation applications.

In practice, index labels work like dictionary keys for retrieving and editing elements. We use s['a'] to get the value with index label 'a', and setting s['e'] = 12.0 updates the corresponding element. The in operator checks index labels: it returns True for an existing label such as 'e' and False for a label such as 'f' that does not exist.

Trying to access a label that is not in the Series index raises a KeyError.
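Here are the dict-like operations described above, applied to an illustrative Series labeled 'a' through 'e':

```python
import pandas as pd

s = pd.Series([1.0, 2.0, 3.0, 4.0, 5.0], index=["a", "b", "c", "d", "e"])

print(s["a"])      # read by label, like a dict
s["e"] = 12.0      # set by label
print("e" in s)    # True: 'in' checks index labels
print("f" in s)    # False

try:
    s["f"]         # a missing label raises KeyError
except KeyError as err:
    print("KeyError:", err)
```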

Series.get() retrieves the value for an index label. If the label is missing, it returns None or a default value that we specify:

s.get('f')

s.get('f', np.nan)

nan

In the first line, s.get('f') looks up the value for the label 'f'. Because 'f' is not in the index, it returns None by default, so nothing is displayed.

The second line, s.get('f', np.nan), does the same but uses np.nan as the default value if the label is absent. Since 'f' is not in the index, it returns nan.

Vectorized operations and label alignment with Series

A Series supports vectorized operations, and operations between Series automatically align on index labels, which simplifies data processing. The details are as follows:

Vectorized Operations: Like a NumPy array, a Series supports element-wise operations on the whole Series at once. These operations are efficient because they run in optimized compiled code rather than in Python loops.

Label Alignment: Label alignment ensures that Series operations follow index labels. Before an operation between two Series, pandas aligns their elements by index label. Elements with matching labels are combined, while labels present in only one Series produce missing values (NaN).

Consider two Series objects, s1 and s2, with different values and different index labels. s1 has the labels 'a', 'b', and 'c' with the values 1, 2, and 3. s2 has the labels 'b', 'c', and 'd' with the values 10, 20, and 30. When they are added, pandas aligns s1 and s2 by index label, and the result combines the elements whose labels appear in both Series. For example, 'b' appears in both Series, so 2 from s1 and 10 from s2 are added to give 12. Labels that appear in only one Series, such as 'a' in s1 and 'd' in s2, produce NaN values, which indicate missing data. Pandas handles label alignment in vectorized operations automatically, so calculations on Series with different indices stay exact and easy to interpret.

The elements with matching labels 'b' and 'c' were added together. The mismatched labels 'a' and 'd' produced NaN values in the output, which demonstrates label alignment.
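The example described above can be reconstructed as follows. The labels of s2 ('b', 'c', 'd') are inferred from the description, since 'b' yields 12 and 'd' appears only in s2.

```python
import pandas as pd

s1 = pd.Series([1, 2, 3], index=["a", "b", "c"])
s2 = pd.Series([10, 20, 30], index=["b", "c", "d"])

result = s1 + s2   # aligned by label; the index is the union a, b, c, d
print(result)      # a: NaN, b: 12.0, c: 23.0, d: NaN
```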

Series operations automatically align data by label, which distinguishes them from ndarray operations. As a result, we can write computations without first checking that the Series involved have the same labels.

When operating on Series with different indices, pandas aligns the elements by index label, so the resulting index is the union of the two indices. Labels present in one Series but not the other get NaN in the result. No explicit alignment step is needed. This gives users a lot of freedom and flexibility in interactive data analysis and research, because they can focus on their operations rather than on aligning data. This built-in data alignment makes pandas a powerful and easy-to-use tool for manipulating labeled data.

Name Attribute

A Series has a name attribute that holds a descriptive name. The name labels the Series object as a whole, not its individual elements.

We can name a Series when we build it. This name can help explain Series data or context. When dealing with several Series or integrating Series with other data structures, it helps keep our code clean and structured.

We can change the name by assigning to the attribute directly (series.name = "MySeries"). If no name is given, the name attribute defaults to None.

A simple code example:
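Here is a minimal sketch with illustrative values:

```python
import pandas as pd

series = pd.Series([1, 2, 3], name="MySeries")
print(series.name)         # MySeries

series.name = "Renamed"    # change the name attribute directly
print(series.name)         # Renamed

print(pd.Series([1, 2]).name)   # None when no name is given
```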

This example creates a Series named "MySeries" and then changes its name attribute. This makes identifying and labeling Series in analysis and code easy.

Series names are often assigned automatically. For example, when we select a single column of a DataFrame, the resulting Series takes the column label as its name.

We can rename a Series with the Series.rename() method.

Note: rename() returns a new Series by default, so the renamed Series s2 and the original Series s refer to different objects.
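Here is a sketch of both behaviors. The DataFrame and the names "original" and "different" are illustrative.

```python
import pandas as pd

df = pd.DataFrame({"price": [2.5, 12.0], "qty": [4, 1]})
col = df["price"]
print(col.name)             # price: inherited from the column label

s = pd.Series([1, 2, 3], name="original")
s2 = s.rename("different")  # returns a new Series by default
print(s.name, s2.name)      # original different
print(s is s2)              # False
```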

DataFrame

A DataFrame is a two-dimensional labeled data structure whose columns can have different types. We can think of it as a SQL table, a spreadsheet, or a dictionary of Series objects. It is generally the most commonly used pandas object. Like a Series, a DataFrame accepts many kinds of input:

- A dict of 1D ndarrays, lists, or Series
- A 2-D numpy.ndarray

Along with the data, we can optionally pass index (row labels) and columns (column labels). If we do, the resulting DataFrame is guaranteed to have that index and/or those columns. As a result, a dictionary of Series combined with a specific index discards all data that does not match the passed index.

A pandas DataFrame is a two-dimensional labeled data structure that resembles a spreadsheet or a table with rows and columns. Each row represents one observation or record, and each column represents a variable or characteristic.

Key Pandas DataFrame characteristics:

Tabular Structure: Data is arranged in a grid of rows and columns, which makes working with and analyzing structured data sets natural.

Labeled Axes: Rows and columns have labels. The columns attribute stores the column labels, and the index stores the row labels. These labels identify data for access and modification.

Heterogeneous Data: Unlike a NumPy array, which holds a single data type, a DataFrame can hold columns of different data types, which makes it adaptable to many kinds of data.

Data Alignment: DataFrames automatically align data by label. When working with several DataFrames, pandas aligns the data by index and column labels.

Rich Functionality: DataFrames provide powerful tools for data cleaning, manipulation, analysis, and visualization, including missing-value handling, grouping and aggregation, merging and joining, indexing and slicing, and more.

Overall, pandas DataFrames are a powerful and flexible Python tool for structured data. Their user-friendly interface, vast capabilities, and effective performance make them popular in data science, machine learning, and data analysis.
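The following sketch uses made-up data to show these characteristics: labeled axes, a different dtype for each column, and automatic alignment on both row and column labels.

```python
import pandas as pd

df = pd.DataFrame({
    "name": ["Ann", "Bob", "Cid"],
    "age": [28, 35, 41],
    "score": [88.5, 92.0, 79.5],
}, index=["r1", "r2", "r3"])

print(df.index.tolist())    # row labels
print(df.columns.tolist())  # column labels
print(df.dtypes)            # a different dtype per column

other = pd.DataFrame({"age": [1, 1]}, index=["r2", "r4"])
print(df[["age"]] + other)  # aligned on row and column labels; unmatched rows are NaN
```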

From dict of Series or dicts

When a DataFrame is created from a dictionary of Series or dictionaries, the resulting index is the union of the indexes of the individual Series. This means the DataFrame's index includes every index label that appears in any of the Series.

If there are nested dictionaries, they are first converted to Series, so each inner dictionary becomes one column whose keys are row labels.

If no columns are specified, the DataFrame will use the ordered list of dictionary keys. The DataFrame's column names will be the outer dictionary's keys, and their order will be preserved.

Breaking down the procedure:

Building the Index: The index of the final DataFrame is the union of the Series indexes, so every label from every Series appears in the DataFrame's index.

Converting Nested Dictionaries: If present, nested dictionaries in the original dictionary are converted into Series before the DataFrame is created, so each of them becomes one column.

Setting the Columns: If no columns are specified, the outer dictionary's keys, in their original order, become the DataFrame's column names.

Through these steps, pandas builds a DataFrame whose column names and index labels match the original dictionary of Series or dictionaries. This makes it simple to convert dictionary data into DataFrames for efficient data management and analysis.
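The first part of this sketch follows the description below: three Series 'A', 'B', and 'C' with the default integer index, using illustrative values. The second part uses a hypothetical dictionary to show the index union and the conversion of a nested dictionary.

```python
import pandas as pd

# Three Series with the same default integer index 0..2.
data = {
    "A": pd.Series([1, 2, 3]),
    "B": pd.Series([4, 5, 6]),
    "C": pd.Series([7, 8, 9]),
}
df = pd.DataFrame(data)
print(df)   # columns A, B, C in dictionary order

# With different indexes, the row index is the union and gaps become NaN;
# the nested dict for "z" is converted to a Series first.
mixed = {
    "x": pd.Series([1.0, 2.0], index=["a", "b"]),
    "y": pd.Series([3.0, 4.0], index=["b", "c"]),
    "z": {"a": 5.0, "c": 6.0},
}
df2 = pd.DataFrame(mixed)
print(df2)
```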

In the above code:

A dictionary called ‘data’ has three Series: ‘A’, ‘B’, and ‘C’. Each Series has values.

We next generate a DataFrame ‘df’ from the dictionary ‘data’ using ‘pd.DataFrame()’.

Printing the DataFrame displays its contents.

In this code, the dictionary data holds three Series labeled 'A', 'B', and 'C', each representing a column of the DataFrame. When we call pd.DataFrame(data), pandas aligns the index labels of the Series. Because all three Series have the same index (the implicit integer index starting at 0), the DataFrame has that index as well.

The dictionary keys "A", "B", and "C" become the DataFrame's column names, and the corresponding Series supply the data. The column order matches the dictionary key order. This shows how pandas creates a DataFrame from a dictionary of Series while accurately capturing the dictionary's contents.

From dict of ndarrays/lists

A pandas DataFrame built from a dictionary of NumPy arrays or lists has one column for each key-value pair. The keys become the column names, and each array or list supplies the data for its column. All of the arrays or lists must have the same length.
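The following sketch uses illustrative values and mixes a NumPy array with lists as column data:

```python
import numpy as np
import pandas as pd

data = {
    "A": np.array([1, 2, 3]),   # a NumPy array column
    "B": [4.0, 5.0, 6.0],       # a list column
    "C": ["x", "y", "z"],
}
df = pd.DataFrame(data)
print(df)   # 3 rows with the default index 0..2
```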

In the above code:

We create a dictionary named ‘data’ with three keys (‘A’, ‘B’, ‘C’) and NumPy arrays or lists for each column.

We next generate a DataFrame ‘df’ from the dictionary ‘data’ using ‘pd.DataFrame()’.

The DataFrame is printed to display its contents.

This code creates a DataFrame with three rows and three columns ('A', 'B', and 'C') containing the dictionary data.

This technique quickly converts structured data from NumPy array or list dictionaries into pandas DataFrames, making tabular data editing easier.

Another example passes an explicit index along with the dictionary. In this case, the index must have the same length as the arrays or lists:
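For example, with illustrative lists and row labels:

```python
import pandas as pd

data = {"A": [1, 2, 3], "B": [4, 5, 6]}

# The index must have the same length as each list.
df = pd.DataFrame(data, index=["r1", "r2", "r3"])
print(df)
```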

From structured or record array

When a DataFrame is created from a structured or record array, every field becomes a column. The field names become the column names, and the data in each field becomes the column data.

A structured array is a NumPy array whose elements have several named fields, like a database record. A record array is a variant that also lets us access the fields as attributes. We can access these fields by name, and they can store different types of data.
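The following sketch uses three illustrative records. The field types ('i4' for 32-bit integers, 'U10' for Unicode strings of up to 10 characters) are assumptions.

```python
import numpy as np
import pandas as pd

# A structured array with three named fields.
data = np.array(
    [(1, "Alice", 25), (2, "Bob", 30), (3, "Carol", 35)],
    dtype=[("ID", "i4"), ("Name", "U10"), ("Age", "i4")],
)
df = pd.DataFrame(data)   # each field becomes a column
print(df)
```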

In the above code example:

We create a structured array of ‘data’ with three fields: ‘ID’, ‘Name’, and ‘Age’. One DataFrame column is represented by each field.

From the structured array ‘data’, we generate a DataFrame ‘df’ using ‘pd.DataFrame()’.

The DataFrame is printed to display its contents.

This code displays the DataFrame columns 'ID', 'Name', and 'Age'. Each row of data represents a structured array element.

Creating DataFrames from structured arrays lets us convert structured data from databases or files into tabular DataFrames for analysis and manipulation.

From a list of dicts

A pandas DataFrame constructed from a list of dictionaries has one row per dictionary. The columns are named after the dictionary keys and contain the matching values. If a dictionary lacks one of the keys, that cell is filled with NaN.

An example of creating a DataFrame using dictionaries follows:
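The following sketch uses illustrative records. The second, smaller example shows that keys missing from a dictionary become missing values.

```python
import pandas as pd

data = [
    {"ID": 1, "Name": "Alice", "Age": 25},
    {"ID": 2, "Name": "Bob", "Age": 30},
    {"ID": 3, "Name": "Carol", "Age": 35},
]
df = pd.DataFrame(data)   # one row per dictionary
print(df)

# Missing keys become missing values in the corresponding rows.
partial = pd.DataFrame([{"ID": 4, "Name": "Dan"}, {"ID": 5, "Age": 40}])
print(partial)
```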

In the above code:

Our list of ‘data’ has three dictionaries, each representing a row of data.

Each dictionary includes ‘ID’, ‘Name’, and ‘Age’ entries for DataFrame column names.

The ‘pd.DataFrame()’ method creates a DataFrame ‘df’ from the list of dictionaries ‘data’.

Finally, we print the DataFrame to display its contents.

This code generates a DataFrame with three columns ('ID', 'Name', and 'Age') and three rows of data, one for each dictionary in the list.

Data from JSON files or APIs often arrives as a list of dictionaries, so this constructor makes it easy to convert such data into a tabular DataFrame for analysis and manipulation.

From a dict of tuples

A pandas DataFrame built from a dictionary of tuples has one column for each key-value pair. The keys name the columns, and the tuple attached to each key contains that column's data. The code below creates a DataFrame from a dictionary of tuples.
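Here is a sketch with illustrative values:

```python
import pandas as pd

data = {
    "ID": (1, 2, 3),                    # each tuple holds one column's values
    "Name": ("Alice", "Bob", "Carol"),
    "Age": (25, 30, 35),
}
df = pd.DataFrame(data)
print(df)
```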

In the above code:

First, we import the pandas library, which provides DataFrame.

A dictionary called 'data' stores the dataset. Each key in the dictionary is a column name, and its value is a tuple of data elements.

Each ‘data’ dictionary key-value pair is explained with comments.

The pd.DataFrame() function creates the DataFrame 'df' from the dictionary of tuples.

Printing the DataFrame displays its contents.

This code displays the DataFrame columns 'ID', 'Name', and 'Age', with three rows of data taken from the elements of the tuples.

Pandas can create DataFrames from dictionaries of tuples to easily convert structured data into tabular representation for manipulation and analysis.

From a Series

The DataFrame will have the same index as the input Series and one column with the Series name (unless another column name is specified).

A Series becomes a column in a pandas DataFrame. The Series index becomes the DataFrame index, and its entries occupy the associated column.

An example of creating a DataFrame from a Series follows.
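The following sketch uses the values [1, 2, 3, 4] and the name 'Numbers'. It also shows to_frame() as an equivalent alternative:

```python
import pandas as pd

s = pd.Series([1, 2, 3, 4], name="Numbers")
df = pd.DataFrame(s)       # one column, named after the Series
print(df)

# Series.to_frame() gives the same result.
print(s.to_frame().equals(df))   # True
```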

In the above code:

We construct a pandas Series 's' with the values [1, 2, 3, 4] and name it 'Numbers'.

The ‘pd.DataFrame()’ method creates a DataFrame ‘df’ from the Series ‘s’.

The result is a DataFrame 'df' with a 'Numbers' column that contains the values from the Series 's'.

DataFrame ‘df’ index is automatically set to Series ‘s’ index.

The DataFrame is printed to display its contents.

This code creates a DataFrame with four rows, each representing a Series element, and one column, "Numbers."

We may use a Series to create a DataFrame from a single column of data for analysis and customization.

From a list of namedtuples

A pandas DataFrame constructed from a list of namedtuples uses the field names of the first namedtuple as its column names. Each namedtuple in the list becomes a DataFrame row, and its values are unpacked, position by position, into the columns.

If a later tuple has fewer fields than the first namedtuple, the remaining columns in that row are marked as missing values. This keeps every row consistent with the first namedtuple's column structure.

If any tuple has more fields than the first namedtuple, a ValueError is raised, because that row does not fit the column structure defined by the first namedtuple.

As a result, pandas can convert a list of namedtuples into a tabular DataFrame, keeping the column structure consistent across rows while tolerating shorter tuples.
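The following sketch implements the Person example described below, with illustrative names and cities. It then adds a shorter tuple, which leaves a missing value, and a longer tuple, which raises ValueError.

```python
from collections import namedtuple

import pandas as pd

Person = namedtuple("Person", ["name", "age", "city"])

data = [
    Person("Alice", 25, "Paris"),
    Person("Bob", 30, "Oslo"),
    Person("Carol", 35, "Lima"),
]
df = pd.DataFrame(data)   # columns come from the first namedtuple's fields
print(df)

# A shorter tuple after the first namedtuple: its missing city is marked as missing.
df_short = pd.DataFrame([Person("Dan", 40, "Rome"), ("Eve", 22)])
print(df_short)

# A longer tuple does not fit the three columns and raises ValueError.
try:
    pd.DataFrame([Person("Dan", 40, "Rome"), ("Eve", 22, "Nice", "extra")])
except ValueError as err:
    print("ValueError:", err)
```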

In the above code:

We import the namedtuple factory from the collections module and import pandas as "pd". Next, we define the namedtuple structure "Person" with the fields "name", "age", and "city", which describes the structure of each data row. We then create a list "data" of "Person" instances, each holding a person's name, age, and city. From this list of namedtuples, "pd.DataFrame()" generates the DataFrame "df", whose columns are determined by the field names of the first namedtuple. Finally, we print the DataFrame to view its contents.

This code creates a DataFrame with three columns ('name', 'age', and 'city') and three data rows, one per namedtuple. The values of each namedtuple are unpacked into the columns defined by the first namedtuple's field names.

From a list of dataclasses

Data classes, introduced in PEP 557, let us define data storage classes with minimal boilerplate code. These classes resemble dictionaries but declare their attributes with type hints, and they are often used to represent structured records.

The pandas DataFrame constructor accepts a list of data classes. The attributes of the data class will represent the columns of the DataFrame, and each instance of the data class in the list will represent a row.

Note that every element of the list passed to the DataFrame constructor should be a data class instance. Mixing types in the list results in a TypeError.

Passing data classes to the pandas DataFrame constructor gives a clearer, more explicit representation of structured data.
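Here is a sketch of the Person data class example, with illustrative sample values:

```python
from dataclasses import dataclass

import pandas as pd


@dataclass
class Person:
    name: str
    age: int
    city: str


data = [
    Person("Alice", 25, "Paris"),
    Person("Bob", 30, "Oslo"),
    Person("Carol", 35, "Lima"),
]
df = pd.DataFrame(data)   # attributes become columns, instances become rows
print(df)
```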

In the above code:

We import the "dataclass" decorator from the "dataclasses" module and import pandas as "pd". We then create a "Person" data class with the "@dataclass" decorator. This class has the attributes "name", "age", and "city". Next, we create several "Person" instances to store personal data and collect them in the list "data". The 'pd.DataFrame()' method generates a DataFrame 'df' from these data class instances. In the DataFrame, the data class attributes become columns and the instances become rows. Finally, we print the DataFrame to display its contents.

This code creates a DataFrame with three columns ('name', 'age', and 'city') and three rows, one per 'Person' instance. Because data classes declare their attributes with type hints, this approach gives a systematic and organized description of the DataFrame's structure.

Missing data

Pandas offers two ways to construct a DataFrame with missing data. The usual approach is to use np.nan to represent missing or undefined values. Alternatively, we can pass a numpy MaskedArray as the data argument to the DataFrame constructor. Its masked entries will be treated as missing values.
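Here are both options, using small illustrative arrays:

```python
import numpy as np
import pandas as pd

# Option 1: np.nan marks a missing value directly.
df1 = pd.DataFrame({"a": [1.0, np.nan], "b": [3.0, 4.0]})

# Option 2: masked entries of a MaskedArray are treated as missing.
masked = np.ma.masked_array(
    [[1.0, 2.0], [3.0, 4.0]],
    mask=[[False, True], [False, False]],
)
df2 = pd.DataFrame(masked, columns=["a", "b"])
print(df1)
print(df2)
```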

Alternate constructors

DataFrame.from_dict

Use pandas' DataFrame.from_dict() method to create a DataFrame from a dictionary of dictionaries or a dictionary of array-like sequences. It works like the DataFrame constructor, with a few differences.

By default, DataFrame.from_dict() uses the outer dictionary keys as column names and the values as column data. Passing a dictionary of dictionaries to the regular DataFrame constructor behaves the same way.

Unlike the constructor, DataFrame.from_dict() accepts an extra parameter, orient. Its default value, 'columns', makes the outer dictionary keys the column names.

With orient='index', the outer dictionary keys become row labels instead, and each value (a dictionary or an array-like sequence) supplies the data for that row.

In conclusion, DataFrame.from_dict() makes it easy to build DataFrames from dictionary-like data, and the orient argument lets you choose whether the keys become column names or row labels.
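A minimal sketch of the two orientations described below; the row labels 'r1' and 'r2' and the sample values are illustrative. Both calls end up with the same table.

```python
import pandas as pd

# Outer keys are column names; inner dictionaries hold column data
data_columns = {
    "Name": {"r1": "Alice", "r2": "Bob"},
    "Age": {"r1": 25, "r2": 32},
}
# Outer keys are row labels; inner dictionaries hold row data
data_index = {
    "r1": {"Name": "Alice", "Age": 25},
    "r2": {"Name": "Bob", "Age": 32},
}

df_columns = pd.DataFrame.from_dict(data_columns)              # orient="columns" (default)
df_index = pd.DataFrame.from_dict(data_index, orient="index")

print(df_columns)
print(df_index)
```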

In the above code:

The two dictionaries, data_columns and data_index, hold data in different orientations. In the first example, DataFrame.from_dict() creates df_columns with the default 'columns' orientation: the outer dictionary keys become column names and the inner dictionaries supply the column data. The second example creates df_index with orient='index': the outer dictionary keys become row labels and the inner dictionaries supply the row data. Finally, we print both DataFrames to inspect their contents.

DataFrame.from_records

Pandas' DataFrame.from_records() function creates a DataFrame from a list of tuples or a structured NumPy ndarray. This method works like the DataFrame constructor, with a few exceptions.

The list of tuples or the structured ndarray is passed as the data to DataFrame.from_records(). Each tuple, or each record of the structured array, becomes one DataFrame row, and its values fill the columns in order.

One key difference is that the index of the resulting DataFrame can come from a field of the structured data: pass that field's name as index instead of using the default integer index.

DataFrame.from_records() lets you define the index field and create a DataFrame from structured data with the flexibility and power of the normal constructor.
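A minimal sketch with made-up records: first the list-of-tuples case described below, then a structured ndarray whose "Name" field is used as the index.

```python
import numpy as np
import pandas as pd

# Each tuple is one row; column names are supplied separately
data = [("Alice", 25, "New York"), ("Bob", 32, "Chicago"), ("Carol", 41, "Boston")]
columns = ["Name", "Age", "City"]
df = pd.DataFrame.from_records(data, columns=columns)
print(df)

# With a structured ndarray, a field can become the index instead of 0..n-1
records = np.array(
    [("Alice", 25), ("Bob", 32)],
    dtype=[("Name", "U10"), ("Age", "i4")],
)
df_named = pd.DataFrame.from_records(records, index="Name")
print(df_named)
```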

In the above code:

Each tuple in our list data represents one row, and its values map to the DataFrame columns in order. The column names are defined in a separate list. DataFrame.from_records() builds the DataFrame df from data, with the column names passed through the columns argument. Finally, printing df displays its contents.

This code creates a DataFrame with three columns ('Name', 'Age', and 'City') and three rows. The column names are set explicitly, and the DataFrame keeps the default integer index.

Column selection, addition, deletion

A pandas DataFrame behaves like a dictionary whose values are Series objects, one per column, so working with its columns is much like working with a dictionary of Series.

Getting columns: Retrieve a column by its key, just as with a dictionary. Indexing with the column name returns the column as a Series.

Setting columns: Assign a Series or array-like object to a key (column name). If the column already exists it is updated; otherwise a new column is inserted.

Deleting columns: Deleting a DataFrame column works like deleting a dictionary entry: use del followed by the column name.

Because a DataFrame can be treated semantically like a dictionary of Series, this syntax feels natural to Python users and keeps data-processing code consistent.
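A minimal sketch of the dictionary analogy, with made-up data: getting, setting, and deleting a column use the same syntax as a dict. The 'Senior' column is a hypothetical example.

```python
import pandas as pd

df = pd.DataFrame({"Name": ["Alice", "Bob"], "Age": [25, 32]})

ages = df["Age"]               # get: returns a Series, like dict[key]
df["Senior"] = df["Age"] > 30  # set: adds a new column (or overwrites one)
del df["Senior"]               # delete: removes the column, like del dict[key]

print(type(ages).__name__)  # Series
print(list(df.columns))     # ['Name', 'Age']
```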

Column Selection

Put a column name in square brackets [] to select that column from a DataFrame. To select several columns, pass a list of column names instead. A single column can also be selected with dot notation (df.column_name), but not if the name contains spaces or other special characters, or clashes with an existing DataFrame attribute or method.
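A short sketch of the three selection styles; the 'Home City' column is a made-up example of a name that dot notation cannot reach.

```python
import pandas as pd

df = pd.DataFrame({
    "Name": ["Alice", "Bob"],
    "Age": [25, 32],
    "Home City": ["New York", "Chicago"],
})

name_col = df["Name"]               # one column name -> Series
subset = df[["Name", "Home City"]]  # list of names -> DataFrame
age_col = df.Age                    # dot notation works for simple names
# df.Home City is a syntax error because of the space; use df["Home City"]
```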

Column Addition

To add a column, write the new column name in square brackets [] and assign a Series or a list of values to it. A list must contain one value per row.

Column Deletion

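We can delete a DataFrame column with drop() or del. With drop(), pass the column name and axis=1 so that the operation applies to columns; drop() returns a new DataFrame and leaves the original unchanged unless inplace=True is set. With del, write del df['column_name'], which removes the column from df directly.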
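A minimal sketch contrasting the two deletion styles on made-up data: drop() leaves the original alone, while del changes it.

```python
import pandas as pd

df = pd.DataFrame({"Name": ["Alice", "Bob"], "Age": [25, 32], "City": ["NY", "LA"]})

# drop() returns a new DataFrame; df itself is unchanged
without_city = df.drop("City", axis=1)

# del removes the column from df in place
del df["Age"]

print(list(without_city.columns))  # ['Name', 'Age']
print(list(df.columns))            # ['Name', 'City']
```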

Column Renaming

Rename columns in a DataFrame using pandas' rename() method. You may change column names using a mapper function or dictionary.

Renaming with a Dictionary

Pass rename() a dictionary that maps current column names (keys) to new names (values), usually through the columns parameter. rename() returns a new DataFrame with the updated column names and leaves the original unchanged; set inplace=True to modify the existing DataFrame instead.

Renaming with a Mapper Function

Alternatively, we can rename columns with a mapper function that takes a current column name and returns the new one. Pass the function to rename() through the columns parameter (or through mapper together with axis='columns'); rename() applies it to every column name and returns a new DataFrame with the updated names. As with a dictionary, set inplace=True to modify the existing DataFrame in place.

In conclusion, rename() lets you use a dictionary or a mapper function to rename DataFrame columns. This helps fix inconsistent names, align naming conventions, and make column names more descriptive.

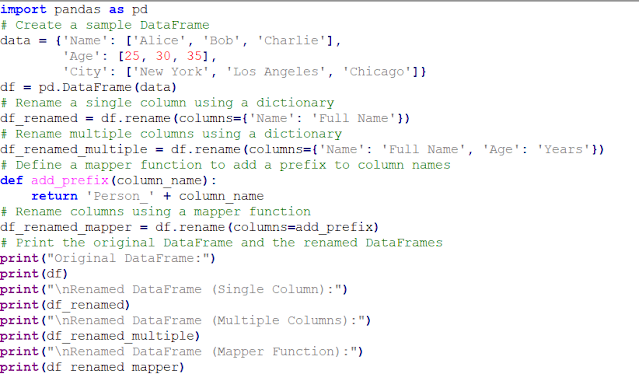
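A minimal sketch of the three renaming steps described below, using made-up rows. Here add_prefix is a small helper function defined for the example, not the built-in DataFrame.add_prefix() method.

```python
import pandas as pd

df = pd.DataFrame({
    "Name": ["Alice", "Bob"],
    "Age": [25, 32],
    "City": ["New York", "Chicago"],
})

# 1. Rename a single column with a dictionary
df_single = df.rename(columns={"Name": "Full Name"})

# 2. Rename several columns at once
df_multi = df.rename(columns={"Name": "Full Name", "Age": "Years"})

# 3. Rename with a mapper function applied to every column name
def add_prefix(col):
    return "person_" + col

df_prefixed = df.rename(columns=add_prefix)

print(df_single)
print(df_multi)
print(df_prefixed)
```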

In the above code:

We create an example DataFrame ‘df’ with the columns ‘Name’, ‘Age’, and ‘City’, and then rename its columns in three ways using 'rename()':

- Rename a single column: ‘Name’ to ‘Full Name’.

- Rename multiple columns: ‘Name’ to ‘Full Name’ and ‘Age’ to ‘Years’.

- Use a mapper function, ‘add_prefix()’, to add the prefix ‘person_’ to every column name.

Each renamed DataFrame is printed to observe the changes.

Column Insertion

The insert() method adds a column to a pandas DataFrame at a specific position. The columns at and after that position shift one place to the right to make room for the new one.

A detailed explanation of insert() follows:

Specifying Position

The loc parameter gives the integer position of the new column: 0 inserts it as the first column, and len(df.columns) appends it after the last one.


Adding Column Name and Values

Using the column parameter, we name the new column. Set the new column values with the value parameter. A scalar, list, NumPy array, or pandas Series can be used as values.

Shifting Existing Columns

After the new column is inserted, the columns that were at or after the insertion position each shift one place to the right to make room for it.

In-Place Modification

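Unlike most DataFrame methods, insert() always modifies the DataFrame in place and returns None; it has no inplace parameter. To keep the original unchanged, call insert() on a copy made with df.copy(). By default, insert() also raises a ValueError if a column with the same name already exists, unless allow_duplicates=True is passed.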

In conclusion, insert() adds a new column at an exact position, which helps when we need to add a column while controlling the column order.

The code sample below shows how to insert columns into a DataFrame with insert(), specifying the position (loc), the column name, and the values; the DataFrame is modified in place.
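A minimal sketch matching the walkthrough that follows; the names, ages, cities, and the scalar 'Unknown' are placeholder values.

```python
import pandas as pd

df = pd.DataFrame({"Name": ["Alice", "Bob", "Carol"], "Age": [25, 32, 41]})

# Scalar value: broadcast to every row; 'Age' shifts to position 2
df.insert(1, "Gender", "Unknown")

# List value: one entry per row; 'Age' shifts again, to position 3
df.insert(2, "City", ["New York", "Chicago", "Boston"])

# insert() changed df directly (it returns None)
print(df)
```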

In the above code:

We create a 'df' DataFrame with 'Name' and 'Age' columns. We next use the 'insert()' function to add 'Gender' and 'City' columns to the DataFrame at specified places.

- Inserting the ‘Gender’ column at position 1 moves the ‘Age’ column right.

- The ‘City’ column is then placed at position 2, pushing the ‘Age’ column right again; the final column order is ‘Name’, ‘Gender’, ‘City’, ‘Age’.

The new columns are inserted with scalar and list values, respectively. Then we display the modified DataFrame to observe the changes.

Indexing/selection

The basics of indexing and selection are as follows.

In pandas, we can use df[col] to extract a column from a DataFrame. This operation returns the values of the requested column as a Series object.

The example code creates a DataFrame called 'df' with the columns 'Name', 'Age', and 'City'. df['Name'] extracts the 'Name' column as a Series object, which is assigned to 'name_series'. Finally, printing 'name_series' shows the 'Name' column values together with their index.
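A minimal sketch of that snippet, with made-up rows:

```python
import pandas as pd

df = pd.DataFrame({
    "Name": ["Alice", "Bob", "Carol"],
    "Age": [25, 32, 41],
    "City": ["New York", "Chicago", "Boston"],
})

name_series = df["Name"]  # a Series that keeps df's index (0, 1, 2)
print(name_series)
```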

Select row by label: The pandas loc indexer selects DataFrame rows by label (index value). df.loc[label], where df is the DataFrame and label is the desired index label, returns the values of that row as a Series.

Indexing by label: The most common use of loc is selecting a row by its label. Depending on the DataFrame's index, labels can be strings, numbers, or tuples.

Select one row: 'df.loc[label]' picks the row with that label when given a single label. The selected row is returned as a Series with the DataFrame's column index.

Series representation: The returned Series contains the row's data and is indexed by the column names, so each value sits under the label of the column it came from.

Error handling: If the label is not in the DataFrame's index, a KeyError is raised, so make sure the index contains the label you select.

Used with MultiIndex: If the DataFrame has a MultiIndex, we may pass a tuple of labels to 'df.loc[label]' to pick rows by hierarchical index level.

To conclude, df.loc[label] is a useful way to select DataFrame rows by label. It returns row values as a Series, making row data handling in pandas easy.
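A minimal sketch of the label-based example described below; the names and ages are made up, and the index labels 'A', 'B', 'C' follow that description.

```python
import pandas as pd

df = pd.DataFrame(
    {"Name": ["Alice", "Bob", "Carol"], "Age": [25, 32, 41]},
    index=["A", "B", "C"],
)
print(df)

selected_row = df.loc["B"]  # Series indexed by the column names
print(selected_row)
```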

In the above code:

We start by constructing an example DataFrame ‘df’ with two columns (‘Name’ and ‘Age’) and a custom index (‘A’, ‘B’, ‘C’). Displaying the original DataFrame shows its structure. The row labeled ‘B’ is selected with df.loc['B'] and stored as a Series in the variable ‘selected_row’. Finally, we display the selected row: its values are the ‘Name’ and ‘Age’ entries of row ‘B’, and the Series index shows the column names (‘Name’ and ‘Age’).

Select row by integer location: Pandas' iloc indexer selects DataFrame rows by integer position. df.iloc[loc], where df is the DataFrame and loc is the integer position, returns the values of the row at that position as a Series.

Check out the complete explanation of ‘df.iloc[loc]’:

Indexing using integers: The principal use of iloc is integer-based indexing, where we specify the row's integer position. The first row is at position 0, and each following row's position increases by 1.

Select one row: Given a single integer, df.iloc[loc] selects the row at that position. The selected row is returned as a Series indexed by the DataFrame's column names.

Series representation: As with loc, the returned Series contains the row's data, with one value per column.

Error handling: Negative positions count from the end (-1 is the last row). If the position falls outside the valid range, that is, not between -len(df) and len(df) - 1, iloc raises an IndexError.

To conclude, df.iloc[loc] is a useful way to select DataFrame rows by numeric location. It returns row values as a Series, making row data handling in pandas easy.
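A minimal sketch of the position-based example described below, with made-up rows; the negative position at the end illustrates counting from the last row.

```python
import pandas as pd

df = pd.DataFrame({"Name": ["Alice", "Bob", "Carol"], "Age": [25, 32, 41]})
print(df)

selected_row = df.iloc[1]  # position 1 = second row (positions start at 0)
print(selected_row)

last_row = df.iloc[-1]     # negative positions count from the end
```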

In the above code: First, we import pandas and create an example DataFrame df from a dictionary, where each key is a column name ("Name" and "Age") and each value lists that column's data. print(df) shows the original DataFrame. df.iloc[1] then selects the row at integer position 1, which is the second row because positions start at 0. The row is stored in selected_row, and print(selected_row) displays it as a Series indexed by the column names.

Slice rows: Rows can be sliced with square brackets ([]) and integer positions. df[5:10] returns a new DataFrame with the rows at positions 5 through 9; position 10 is excluded.

Slice operation: df[5:10] selects a slice of rows from the DataFrame df, where 5:10 gives the range of row positions.

Boundaries: As in standard Python slicing, the start position (5) is inclusive, so the row at position 5 is included. The end position (10) is exclusive, so the slice does not contain the row at position 10.

Result: The slice operation df[5:10] returns a new DataFrame with the rows of df at positions 5 up to, but not including, 10. It has the same columns as the original DataFrame.
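A minimal sketch of the slicing example walked through below, with twelve made-up rows so that positions 5 to 9 exist:

```python
import pandas as pd

df = pd.DataFrame({
    "Name": [f"Person {i}" for i in range(12)],
    "Age": [20 + i for i in range(12)],
})
print(df)

sliced_df = df[5:10]  # rows at positions 5, 6, 7, 8, 9 (10 is excluded)
print(sliced_df)
```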

This code sample slices DataFrame rows using Python's slicing syntax [5:10]. An explanation:

- Create the DataFrame: an example DataFrame df is built from a dictionary with matching "Name" and "Age" values.

- View the original DataFrame: printing df shows its contents.

- Slice the rows: df[5:10] selects the rows at positions 5 through 9 (position 10 is excluded).

- Store the slice: the selected rows form a new DataFrame, sliced_df.

- Display the result: print(sliced_df) shows the selected rows.

The code prints the original DataFrame df and the sliced DataFrame sliced_df, which contains rows 5 to 9 (inclusive).

To conclude, slicing a DataFrame with integer positions, as in df[5:10], retrieves a contiguous range of rows. It is a quick and efficient way to take a subset of rows in pandas.

Select rows by boolean vector: Pandas can filter the rows of a DataFrame with a boolean vector, often produced by a boolean expression. In df[bool_vec], where df is the DataFrame and bool_vec is a Series or list of boolean values with one entry per row, only the rows with True values are selected, and a new DataFrame containing them is returned.

This is how df[bool_vec] works:

The boolean vector: bool_vec holds a True or False value for each DataFrame row. A True entry keeps the corresponding row; a False entry excludes it.

Row filtering: df[bool_vec] filters the DataFrame's rows, so only rows whose bool_vec value is True appear in the result.

Subset creation: The result is a subset of the original DataFrame containing only the rows with True values; it has the same columns as the original.

In the example, the DataFrame df stores the "Name" and "Age" of five people. bool_vec is a boolean vector, [True, False, True, False, True], that is True for the rows to keep. df[bool_vec] filters the rows, so filtered_df contains only the rows whose bool_vec value is True. Finally, we print the filtered DataFrame to examine the selected rows.
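A minimal sketch of that example; the five names and ages are made up.

```python
import pandas as pd

df = pd.DataFrame({
    "Name": ["Alice", "Bob", "Carol", "Dave", "Eve"],
    "Age": [25, 32, 41, 19, 37],
})

bool_vec = [True, False, True, False, True]  # one entry per row
filtered_df = df[bool_vec]                   # keeps rows 0, 2 and 4
print(filtered_df)
```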

Transposing

Pandas can transpose a DataFrame, turning its rows into columns and its columns into rows; the operation swaps the two axes. There are two common ways to transpose a DataFrame:

T attribute: The T property of a DataFrame is its transpose. Accessing df.T returns a new DataFrame in which rows and columns are swapped, leaving the original DataFrame unchanged.

transpose() method: The same result is available from the transpose() method; T is shorthand for df.transpose(). Like T, transpose() returns a transposed DataFrame without modifying the original.

Both approaches give the same result, so you can use whichever reads more clearly.

Look at the DataFrame transposing code:

transposed_df = df.T  # or, equivalently: transposed_df = df.transpose()

transposed_df is a new DataFrame with rows and columns swapped. Transposing is useful, for example, for comparing the same attributes across several observations side by side.

Both approaches create the identical transposed DataFrame. For a DataFrame of names and ages, the 'Name' and 'Age' columns become rows, matching the new axis orientation.
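A small sketch with made-up names and ages showing that both forms agree. One design point worth knowing: after transposing, each new column mixes text and numbers, so its dtype usually becomes object.

```python
import pandas as pd

df = pd.DataFrame({"Name": ["Alice", "Bob"], "Age": [25, 32]})

transposed_df = df.T          # attribute form
same_result = df.transpose()  # method form

print(transposed_df)
# 'Name' and 'Age' are now row labels; 0 and 1 are column labels
print(transposed_df.dtypes)
```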

DataFrame interoperability with NumPy functions

The ability to seamlessly mix pandas DataFrames with NumPy operations and functions is called "DataFrame interoperability with NumPy functions". It is possible because pandas is built on NumPy, which allows DataFrames to interface with NumPy arrays and use NumPy's many mathematical and array manipulation capabilities.

NumPy functions and DataFrame compatibility are explained in detail:

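Array protocols: DataFrames implement NumPy's array interfaces, so many NumPy functions accept them directly. Element-wise functions (ufuncs) such as np.sqrt or np.exp return a DataFrame with the original row and column labels, while np.asarray(df) or df.to_numpy() give a plain NumPy array when one is needed.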

Performance: DataFrame columns are typically backed by NumPy arrays, so these operations run as vectorized NumPy code. This is much faster than looping over rows in Python, especially for large datasets.

Integration with NumPy functions: DataFrames and Series can be passed directly to many NumPy functions, including mathematical calculations (np.sum, np.mean) and array manipulation functions. These provide powerful options for editing and analyzing DataFrame values along either axis.

Broadcasting: NumPy's broadcasting rules apply when DataFrames are combined with NumPy arrays. For example, subtracting a 1-D array that has one value per column subtracts those values from every row.
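A minimal sketch of these interactions on made-up numbers: a ufunc that keeps the labels, a NumPy reduction on one column, broadcasting a per-column array across rows, and converting back to a plain ndarray.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"a": [1.0, 4.0, 9.0], "b": [16.0, 25.0, 36.0]})

roots = np.sqrt(df)                    # ufunc: returns a DataFrame with the same labels
col_mean = np.mean(df["a"])            # reduction on a column returns a scalar
centered = df - np.array([1.0, 2.0])   # one value per column, broadcast over every row
arr = df.to_numpy()                    # plain ndarray when one is needed

print(roots)
print(col_mean)
print(centered)
```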

Because DataFrames are NumPy-compatible, pandas users can draw on NumPy's large ecosystem of statistical functions, linear algebra routines, and more.

DataFrame interoperability with NumPy operations improves pandas' data processing and scientific computing. Blending NumPy and pandas to perform complex operations on structured datasets makes data manipulation easy and flexible.

Accessing Files

Pandas can read data from CSV, Excel, SQL databases, JSON, and many other sources. Its reader functions import the data into DataFrames (or Series).

File access in pandas is explained here:

Choose the file type: Identify the format of the data to open. Pandas supports CSV, Excel, SQL, JSON, HTML, HDF5, Parquet, and others, and each format has its own reader function.

Use the reader function: Pandas has multiple reader functions. The most common are:

- pd.read_csv(): reads CSV data into a DataFrame.

- pd.read_excel(): reads Excel data into a DataFrame.

- pd.read_sql(): reads the result of a SQL query (or a database table) into a DataFrame.

- pd.read_json(): reads JSON data into a DataFrame.

- pd.read_html(): reads HTML tables into a list of DataFrames, one per table.

- pd.read_hdf(): reads data from an HDF5 file into a DataFrame.

- pd.read_parquet(): reads a Parquet file into a DataFrame.

URL or File Path: The argument to the file reading function is the file path or URL. It might be "https://example.com/data.csv" or "data.csv" for a local file path.

Adjust settings: We may customize the import process by changing file reading function parameters. Delimiter characters, column names, data types, encoding, header rows, skipping rows, and more can be chosen based on our data demands.

Store the result in a variable: Assign the returned DataFrame (or other pandas data structure) to a variable, so that pandas functions and methods can be used to analyze the file data.

Explore and analyze the data: After loading the data, we can explore and analyze it with methods such as head(), info(), describe(), groupby(), and plot().

In conclusion, using pandas to access files requires selecting the right file reading function, supplying the file path or URL, adjusting the parameters, and assigning the returned data to a variable for further modification and analysis.

Pandas' pd.read_csv() method reads CSV data into a DataFrame. The main parameter is the CSV file location or URL. Customize the import process using various settings. The delimiter, column names, index, data types, and missing entry handling are examples. The function returns a DataFrame with CSV data, which may be analyzed using pandas functions and methods. Overall, pd.read_csv() provides a simple and flexible way to import CSV tabular data into Python for analysis.
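A minimal sketch of a few of those settings. To keep it self-contained it reads from an in-memory io.StringIO object instead of a file; the semicolon delimiter, the 'id' column, and the "missing" marker are made-up examples.

```python
import io

import pandas as pd

# Stand-in for a file on disk; a path such as "data.csv" or a URL works the same way
csv_text = io.StringIO(
    "id;name;score\n"
    "1;Alice;90\n"
    "2;Bob;missing\n"
)

df = pd.read_csv(
    csv_text,
    sep=";",                # custom delimiter
    index_col="id",         # use the 'id' column as the row index
    na_values=["missing"],  # treat the string 'missing' as a missing value
)
print(df)
```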

The pandas function pd.read_excel() reads Excel data into a DataFrame. Its main parameter is the Excel file path or URL. Optional parameters customize the import, including the sheet(s) to read, column names, index, data types, and missing-value handling. The function returns the sheet data as a DataFrame (or as a dictionary of DataFrames when several sheets are requested), which can then be explored with pandas functions and methods. If the workbook contains several sheets, the sheet_name parameter selects which ones to read. Reading .xlsx files requires the openpyxl package. pd.read_excel() makes it simple to bring tabular data from Excel spreadsheets into Python for analysis.

pd.read_sql() reads the result of a SQL query into a pandas DataFrame, which simplifies pulling data out of a database. Its main inputs are the SQL query text (or a table name) and a database connection, such as a SQLAlchemy engine or a sqlite3 connection. Optional parameters include index_col to set the index, params to fill query placeholders, and parse_dates to convert columns to dates. Once the data is in a DataFrame, pandas functions and methods can explore and analyze it. pd.read_sql() makes database extraction, analysis, and visualization easier in Python.

Pandas' pd.read_json() method reads JSON data into a DataFrame. JSON data may be imported into Python for analysis and modification. The function turns JSON data into a pandas DataFrame from the file path or URL. Parameters like JSON structure orientation, column data types, and missing values may be set to personalize the import process. JSON data imported into a DataFrame may be browsed, analyzed, and processed using pandas methods and functions. JSON-formatted data is utilized in many applications and online services, and pd.read_json() is useful for working with it.

pd.read_html() converts HTML tables into pandas DataFrames. It automatically finds and parses the tables in a page, which simplifies scraping tabular data from the web. The function takes a URL or HTML content and returns a list of DataFrames, one for each table found. Parameters such as match (keep only tables containing given text) and header customize the import. It needs an HTML parser such as lxml to be installed. With pd.read_html(), structured data from websites can be pulled directly into Python for analysis and visualization.

Pandas' pd.read_hdf() function reads data from an HDF5 file into a DataFrame. HDF5 is a versatile file format designed to hold large, complex datasets, and pd.read_hdf() lets users quickly import such data into Python for analysis. The function takes the HDF5 file path and returns the stored data as a DataFrame. Optional parameters customize the import: key selects which stored object to read, and for data saved in table format, where filters rows and columns selects the columns to load. Reading HDF5 files requires the PyTables package. pd.read_hdf() makes it easy to analyze complex HDF5 datasets in Python.

Pandas' pd.read_parquet() function reads Parquet files into DataFrames. Parquet is a columnar file format designed for large-scale data processing and handles very large datasets well. The function takes the Parquet file path as its main parameter and returns a DataFrame containing the file's data. Optional parameters let you choose which columns to read (columns), filter rows (filters), and control the resulting data types. Reading Parquet requires an engine such as pyarrow or fastparquet. pd.read_parquet() simplifies working with columnar data in Python analysis workflows.

Here's the code for all the above file access methods:
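A runnable sketch covering the readers that need no extra packages: CSV and JSON from in-memory text, and SQL through Python's built-in sqlite3 module with an in-memory database. The remaining readers are shown as comments because they need optional dependencies; their file names and URL are hypothetical.

```python
import io
import sqlite3

import pandas as pd

# CSV (a path or URL works the same way)
df_csv = pd.read_csv(io.StringIO("name,age\nAlice,25\nBob,32\n"))

# JSON: a list of records
json_text = io.StringIO('[{"name": "Alice", "age": 25}, {"name": "Bob", "age": 32}]')
df_json = pd.read_json(json_text, orient="records")

# SQL: an in-memory SQLite database stands in for a real database
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE people (name TEXT, age INTEGER)")
conn.executemany("INSERT INTO people VALUES (?, ?)", [("Alice", 25), ("Bob", 32)])
df_sql = pd.read_sql("SELECT * FROM people WHERE age > ?", conn, params=(30,))
conn.close()

# Same pattern, but these need optional packages (hypothetical file names):
# pd.read_excel("sales.xlsx", sheet_name="2023")       # openpyxl
# pd.read_html("https://example.com/tables.html")      # lxml; returns a list
# pd.read_hdf("store.h5", key="df")                    # PyTables
# pd.read_parquet("data.parquet", columns=["name"])    # pyarrow or fastparquet

print(df_csv)
print(df_json)
print(df_sql)
```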

Viewing data

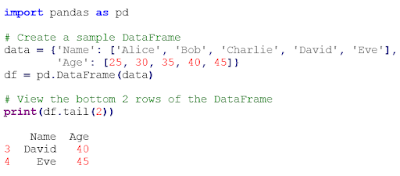

Pandas' head() and tail() methods display a DataFrame's top and bottom rows.

head(): head() returns the first n rows of the DataFrame, where n defaults to 5. It speeds up inspecting the content and structure of a DataFrame, especially a large one, and helps users understand the column names, values, and layout of the data. For example, df.head(10) returns the first 10 rows of df, and head() without an argument returns the first 5.

tail(): tail() returns the last n rows of the DataFrame, where n defaults to 5. Looking at the end of a DataFrame can reveal patterns and trends in the final records, for example the most recent entries in time-ordered data. Like head(), tail() lets users quickly check the bottom rows and see how the data ends. For instance, df.tail(8) returns the last 8 rows of df.

In conclusion, head() and tail() are essential pandas methods for quickly examining the beginning and end of a DataFrame, and they are often used when first exploring a dataset.
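A quick sketch on a made-up 20-row DataFrame:

```python
import pandas as pd

df = pd.DataFrame({"value": range(20)})

print(df.head())    # first 5 rows (default n=5)
print(df.head(10))  # first 10 rows
print(df.tail(8))   # last 8 rows
```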

DataFrame.index and DataFrame.columns show row and column labels in pandas.

The DataFrame.index property returns an Index object with the row labels. It can be used to look up the labels, and assigning a new sequence to df.index replaces them. It is especially useful for identifying rows and for label-based operations. For example, df.index returns the row labels of the DataFrame df.
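A minimal sketch with a made-up three-row DataFrame, showing the default index and then replacing it:

```python
import pandas as pd

df = pd.DataFrame({"Name": ["Alice", "Bob", "Carol"], "Age": [25, 32, 41]})

original_index = df.index
print(original_index)  # RangeIndex(start=0, stop=3, step=1)

# Assigning a new sequence replaces the row labels
df.index = ["a", "b", "c"]
print(df.loc["b", "Name"])  # Bob
```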

This demonstrates that the default index of df is a RangeIndex that starts at 0, stops at 3 (exclusive), and has a step of 1. A RangeIndex is a memory-efficient integer index: each row gets an integer label that starts at 0 and increases by 1.

DataFrame.columns: The columns property returns an Index object holding the column labels. It can be used to look up the labels, and assigning a new list to df.columns replaces them. Column labels help identify columns and work with them. For example, df.columns returns the column labels of df.
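A minimal sketch with made-up data, reading the column labels and then replacing them all at once:

```python
import pandas as pd

df = pd.DataFrame({"Name": ["Alice", "Bob", "Carol"], "Age": [25, 32, 41]})

print(df.columns)        # an Index holding 'Name' and 'Age'
print(list(df.columns))  # ['Name', 'Age']

# Assigning a new list replaces all column labels
df.columns = ["name", "age"]
```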

Here the column labels of df are 'Name' and 'Age'. The dtype='object' in the output means the labels are stored as generic Python objects, which is how pandas has traditionally stored strings.

In short, DataFrame.index and DataFrame.columns let users inspect, and replace, a DataFrame's row and column labels.

describe(): Pandas' describe() method computes summary statistics for the numerical columns of a DataFrame, describing the distribution, dispersion, and central tendency of the data. For each numerical column it reports the count, mean, standard deviation, minimum, maximum, and the 25th, 50th, and 75th percentiles. We use describe() mainly during exploratory data analysis (EDA) to quickly assess each column's range and variability, spot potential outliers, and understand the data distribution.

Look at the code to understand:
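A minimal sketch with made-up names, ages, and salaries; the text column 'Name' is skipped because describe() summarizes numerical columns by default.

```python
import pandas as pd

df = pd.DataFrame({
    "Name": ["Alice", "Bob", "Carol", "Dave"],
    "Age": [25, 32, 41, 29],
    "Salary": [50000, 64000, 81000, 58000],
})

summary = df.describe()  # count, mean, std, min, 25%, 50%, 75%, max
print(summary)
```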

When this code is executed, describe() prints summary statistics for the numerical columns 'Age' and 'Salary' of df, summarizing their distribution.

sort_index(): Pandas' sort_index() sorts a DataFrame by its index labels along a given axis. By default it sorts the rows (axis=0) in ascending order; pass ascending=False for descending order, or axis=1 to sort the columns by their labels instead. Sorting by index labels makes rows easier to find and analyze.

Look at the code:
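A minimal sketch with made-up ages and shuffled index labels:

```python
import pandas as pd

df = pd.DataFrame({"Age": [41, 25, 32]}, index=["c", "a", "b"])

sorted_df = df.sort_index()                # rows ordered a, b, c
desc_df = df.sort_index(ascending=False)   # rows ordered c, b, a
print(sorted_df)
```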

This code sample uses sort_index() to sort the rows of df in ascending order of their index labels and stores the result in sorted_df.

sort_values(): The `sort_values()` method in Pandas sorts DataFrame rows by the values in one or more columns. Ordering data by column values simplifies exploration and analysis and helps reveal patterns, trends, and outliers. Passing a list of column names to `by` sorts by several columns, and the `ascending` parameter controls the direction for each one.

See an example of code:
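A minimal sketch with made-up rows, sorting first by one column and then by two columns with mixed directions:

```python
import pandas as pd

df = pd.DataFrame({"Name": ["Alice", "Bob", "Carol"], "Age": [32, 25, 32]})

sorted_df = df.sort_values(by="Age")  # ascending by default

# Several columns: Age descending, then Name ascending to break ties
multi_df = df.sort_values(by=["Age", "Name"], ascending=[False, True])

print(sorted_df)
print(multi_df)
```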

This code uses `sort_values()` to sort the rows of the DataFrame `df` by the 'Age' column in ascending order; `sorted_df` holds the rows ordered by their 'Age' values.

DataFrame.at: Pandas' at accessor uses label-based indexing to quickly get or set a single value in a DataFrame. Given a row label and a column label, df.at[row_label, column_label] returns the scalar at their intersection. It is useful for reading specific elements in large DataFrames and is typically faster than loc[] when only a single value is needed.

Look at the code:
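A minimal sketch with made-up rows labeled 'A', 'B', 'C':

```python
import pandas as pd

df = pd.DataFrame(
    {"Name": ["Alice", "Bob", "Carol"], "Age": [25, 32, 41]},
    index=["A", "B", "C"],
)

value = df.at["B", "Age"]  # row label 'B', column label 'Age'
print(value)  # 32
```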

This code sample uses df.at['B', 'Age'] to retrieve the value in row 'B' and column 'Age' of df, and prints it to the console.

DataFrame.iat: Like at, the iat accessor retrieves a single value quickly, but it uses integer positions instead of labels. Given integer row and column positions, df.iat[row, col] returns the value at their intersection, which is useful for extracting specific elements from large DataFrames.

Look at the code:
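A minimal sketch with made-up rows and the default integer index:

```python
import pandas as pd

df = pd.DataFrame({"Name": ["Alice", "Bob", "Carol"], "Age": [25, 32, 41]})

value = df.iat[1, 1]  # second row, second column ('Age')
print(value)  # 32
```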

This code sample uses df.iat[1, 1] to retrieve the value in the second row and second column (positions 1, 1) of df, and prints it to the console.

Boolean indexing

Boolean indexing filters DataFrame data in pandas by preset criteria. It involves creating arrays of boolean masks to determine if each DataFrame element meets a condition. These boolean masks select DataFrame rows or columns with True booleans. This approach lets users extract data subsets that meet specific criteria precisely and flexibly. Boolean indexing aids DataFrame filtering, querying, and conditional operations.

Look at the code:
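A minimal sketch with made-up names and ages:

```python
import pandas as pd

df = pd.DataFrame({
    "Name": ["Alice", "Bob", "Carol", "Dave", "Eve"],
    "Age": [25, 32, 41, 19, 37],
})

mask = df["Age"] > 30   # boolean Series, one value per row
filtered_df = df[mask]  # keep only rows where mask is True
print(filtered_df)
```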

This code snippet builds a boolean mask that is True where the 'Age' column of df is greater than 30, then uses the mask to filter df so that only the rows meeting that condition remain.

isin(): Pandas' isin() method checks whether each value in a column is contained in a given list or array. It returns a boolean mask showing which elements are among those values, which makes it useful for filtering DataFrame rows. Passing a list of values to isin() lets users quickly find rows that belong to a set of categories.

Look at the code:
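A minimal sketch with made-up names and cities:

```python
import pandas as pd

df = pd.DataFrame({
    "Name": ["Alice", "Bob", "Carol", "Dave"],
    "City": ["New York", "Chicago", "Boston", "Chicago"],
})

cities_to_filter = ["New York", "Chicago"]
mask = df["City"].isin(cities_to_filter)  # True where City is in the list
filtered_df = df[mask]
print(filtered_df)
```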

This code snippet filters DataFrame df rows when the 'City' column matches any cities_to_filter value using isin(). The DataFrame filtered_df only has rows with "New York" or "Chicago" in the "City" column.

Setting

In pandas, "setting" means modifying the values in a DataFrame or Series. It lets users change rows, columns, and other parts of a dataset for data transformation and manipulation, either by updating existing data or by adding new rows and columns. Besides direct assignment, the loc[], iloc[], and at[] accessors provide label- or position-based access for updating values. Setting values lets users clean, transform, and change datasets, which makes it essential for data analysis and preparation.

Look at the code:
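A minimal sketch with made-up rows. The first two steps follow the description below; the last line, a conditional update with loc, is an extra illustration.

```python
import pandas as pd

df = pd.DataFrame({"Name": ["Alice", "Bob"], "Age": [25, 32]})

df.at[1, "Age"] = 33   # update a single value by row and column label
df["City"] = "Unknown"  # new column; the scalar is broadcast to every row

# Conditional update: set City only for rows where Age > 30
df.loc[df["Age"] > 30, "City"] = "Chicago"
print(df)
```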

The code snippet updates a single value in the 'Age' column with at[] and creates a new column 'City' filled with a default value by direct assignment. Both changes modify df itself, so df reflects the updated dataset.

where(): Pandas' where() conditionally keeps or replaces values in a DataFrame or Series. Values where the condition is True are kept; the others are replaced with the value given as other, or with NaN by default. The result has the same shape as the input, so it is useful for replacing or masking values without changing the data structure.

Look at the code:
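A minimal sketch with made-up columns 'A' and 'B'; only 'A' is changed, and the last line shows the default NaN replacement.

```python
import pandas as pd

df = pd.DataFrame({"A": [1, 2, 3], "B": [10, 20, 30]})

# Keep 'A' values where A > 1; replace the others with -1
filtered_df = df.assign(A=df["A"].where(df["A"] > 1, -1))
print(filtered_df)

# Without a replacement value, non-matching entries become NaN
nan_version = df["A"].where(df["A"] > 1)
```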

This code sample uses where() to replace the values in the 'A' column of df that do not satisfy A > 1. Rows that meet the condition keep their values; the others get -1. The DataFrame filtered_df shows the result of this conditional replacement.

Reindexing

Pandas reindexes DataFrames and Series by conforming them to a new set of index labels or column names. Reindexing is often used to align data structures on the same labels or to rearrange data in a specified order. Labels in the new index that do not exist in the original receive NaN, or a custom value passed as fill_value. This keeps data consistent and compatible across datasets and analyses. The reindex() method returns a new DataFrame or Series with the requested labels.

Let’s look at the code:
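Here is a minimal sketch of reindex(). It assumes a hypothetical DataFrame with string index labels 'a' to 'c', and a new_index list that adds the label 'd', which does not exist in the data.

```python
import pandas as pd

# Hypothetical sample data with string index labels.
df = pd.DataFrame({'A': [1, 2, 3], 'B': [4, 5, 6]}, index=['a', 'b', 'c'])

# Rearrange rows to follow new_index; 'd' is not in df, so its row is NaN.
new_index = ['c', 'a', 'b', 'd']
reindexed_df = df.reindex(new_index)

# fill_value supplies a default for new labels instead of NaN.
filled_reindexed_df = df.reindex(new_index, fill_value=0)

# Columns can be reindexed too; 'C' is new and therefore all NaN.
column_reindexed_df = df.reindex(columns=['B', 'A', 'C'])

print(reindexed_df)
```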

This code snippet uses reindex() to conform the index of DataFrame df to the new_index list. The resulting DataFrame, reindexed_df, contains the original data with its rows rearranged to match that order. Any label in new_index that did not exist in the original DataFrame gets a row of NaN values.

Missing data

Missing data in a DataFrame or Series is displayed as NaN in pandas. Data analysis and preparation must handle missing data to ensure analytical output correctness. Pandas handles missing data using imputation, removal, and detection. Users can utilize isna() and isnull() to find missing data and drop or fill them with predetermined values or statistical measurements. Missing data management ensures that modeling and data analysis are done on complete and reliable datasets, reducing bias and mistakes.

Look at an example of handling missing data:
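Here is a minimal sketch of the three steps. It assumes a hypothetical DataFrame with two missing entries. The mean-based fill at the end is an extra illustration of filling with a statistical measure.

```python
import numpy as np
import pandas as pd

# Hypothetical data with two missing entries.
df = pd.DataFrame({'A': [1.0, np.nan, 3.0], 'B': [4.0, 5.0, np.nan]})

# isna() returns a boolean mask; summing it counts missing values per column.
missing_mask = df.isna()
print(missing_mask.sum())

# dropna() drops every row that contains at least one NaN.
cleaned_df = df.dropna()

# fillna(0) replaces every NaN with 0.
filled_df = df.fillna(0)

# Filling with each column's mean instead of a constant.
mean_filled = df.fillna(df.mean())
```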

This code example handles missing data using pandas in three steps:

1. isna() detects missing values in the DataFrame df.
2. dropna() removes rows that contain missing values, producing the DataFrame cleaned_df.
3. fillna() replaces missing values with 0, producing the DataFrame filled_df.

These are the most common ways to handle missing data in pandas.

Basic mathematical operations and statistical operations

Pandas makes mathematical operations on DataFrame columns easy. Addition, subtraction, multiplication, and division can be applied to entire columns or to subsets of the data. During these operations, pandas automatically aligns data by index labels. Values are matched by label rather than by position, and a label present in only one operand produces NaN. When two DataFrame columns are added, each element of the new column is the sum of the corresponding elements of the original columns. Subtraction, multiplication, and division likewise work element by element on the aligned data.

Pandas can compute aggregate statistics for DataFrame columns with methods such as sum(), mean(), max(), and min(). These methods let users quickly produce common summary metrics to understand a dataset's features and distribution. For custom computations, pandas offers two methods:

- apply() applies a function to each column or row of a DataFrame, or to each element of a Series.
- applymap() applies a function element-wise to a whole DataFrame. Since pandas 2.1, applymap() is deprecated in favor of DataFrame.map().

Overall, pandas' support for mathematical operations and functions lets users carry out data analysis quickly.
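Here is a minimal sketch. It assumes a hypothetical DataFrame with numeric columns 'A' and 'B', and new columns 'C' through 'F' for the four arithmetic results. The two small hypothetical Series at the end, s1 and s2, illustrate index alignment.

```python
import pandas as pd

# Hypothetical sample data.
df = pd.DataFrame({'A': [10, 20, 30], 'B': [2, 4, 5]})

# Element-wise arithmetic on columns aligned by index.
df['C'] = df['A'] + df['B']
df['D'] = df['A'] - df['B']
df['E'] = df['A'] * df['B']
df['F'] = df['A'] / df['B']

# Column-wise aggregate statistics.
totals = df.sum()
means = df.mean()
maximums = df.max()
minimums = df.min()

print(df)
print(totals, means, maximums, minimums, sep='\n\n')

# Alignment: only label 'y' exists in both Series, so 'x' and 'z' become NaN.
s1 = pd.Series([1, 2], index=['x', 'y'])
s2 = pd.Series([10, 20], index=['y', 'z'])
aligned_sum = s1 + s2
```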

This code shows basic mathematical operations and aggregate statistics on a pandas DataFrame. The DataFrame starts with two numeric columns, 'A' and 'B'. Four further columns, 'C' through 'F', are then added to hold the results of addition, subtraction, multiplication, and division on the initial columns. Built-in pandas methods compute aggregate statistics such as column totals, means, maximums, and minimums. Finally, the updated DataFrame and the aggregate statistics are printed. The example shows how pandas' user-friendly interface simplifies data manipulation and analysis.

Advanced mathematical operations and statistical operations

Pandas supports complex calculations and transformations on DataFrame columns. These procedures are needed for statistical modeling, machine learning, and time series analysis.

Pandas supports complicated mathematical operations because it interoperates with NumPy, SciPy, and other libraries. Users can apply NumPy's array-based operations directly to DataFrame columns, including mathematical functions, element-wise operations, and linear algebra. NumPy's FFT routines can compute Fourier transforms of column data. Pandas itself offers rolling-window calculations, correlation analysis, and interpolation routines.

A notable feature is the ability to compute aggregate statistics, such as the mean, sum, or standard deviation, over a moving window of data points. These rolling calculations are useful in time series analysis, for example to compute moving averages that smooth out short-term fluctuations.

Pandas also calculates descriptive statistics and correlations. describe() produces summary statistics, and corr() produces correlation matrices that reveal relationships between columns. Hypothesis tests, p-values, and confidence intervals are usually computed with SciPy or statsmodels, applied to data held in pandas structures.

Overall, pandas' wide support for sophisticated mathematical operations makes it a useful tool for quantitative analysis and scientific computing since it speeds up data processing and modeling.

Look at the code for advanced mathematical and statistical procedures:
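Here is a minimal sketch of these procedures. It makes three assumptions: the data is random (seeded with NumPy's default_rng so results are reproducible), the rolling window is 10 rows, and one value is deliberately removed to demonstrate interpolation.

```python
import numpy as np
import pandas as pd

# Hypothetical random data; the seed makes the example reproducible.
rng = np.random.default_rng(seed=0)
df = pd.DataFrame(rng.normal(size=(50, 3)), columns=['X', 'Y', 'Z'])

# Rolling statistics over a 10-row window. The first 9 results are NaN
# because the window is not yet full.
rolling_mean = df['X'].rolling(window=10).mean()
rolling_std = df['X'].rolling(window=10).std()

# Pairwise Pearson correlation between columns.
correlation_matrix = df.corr()

# Remove one value, then fill it by linear interpolation.
df_with_gap = df.copy()
df_with_gap.loc[5, 'X'] = np.nan
interpolated = df_with_gap.interpolate(method='linear')

print(rolling_mean.tail(), rolling_std.tail(), correlation_matrix, sep='\n\n')
```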

The code shows advanced mathematical operations on a Pandas DataFrame. First, a DataFrame with random numbers is created. Rolling calculations determine the rolling mean and standard deviation across 10 data points. Rolling calculations can reveal patterns and smooth out inconsistencies in time series data. A correlation matrix shows variable relationships by analyzing column linkages. Linear interpolation fills missing DataFrame values to ensure data continuity. The DataFrame and generated statistics illustrate how pandas can efficiently and successfully enable complicated data analysis.

User-defined functions

Pandas lets users write their own functions to transform DataFrame rows or columns. Such user-defined functions encapsulate complex logic or computations, which makes data analysis workflows flexible and reusable. They are ordinary Python functions, defined with the def keyword or as lambdas. They take values, rows, or columns as input, perform operations or transformations, and return a result. Once defined, a function can be applied to DataFrame columns or rows with the apply() method, so large datasets can be processed with minimal code.

Let’s look at the code:
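Here is a minimal sketch. It assumes a hypothetical DataFrame with numeric columns 'A' and 'B'.

```python
import pandas as pd

# Hypothetical sample data.
df = pd.DataFrame({'A': [1, 2, 3], 'B': [4, 5, 6]})

def calculate_sum(a, b):
    """Return the sum of two values."""
    return a + b

# axis=1 passes each row to the lambda as a Series.
df['Sum'] = df.apply(lambda row: calculate_sum(row['A'], row['B']), axis=1)

print(df)
```

For simple arithmetic like this, calling calculate_sum(df['A'], df['B']) directly on the columns gives the same result. It is usually faster because it avoids a Python-level loop over rows. apply() is more useful when the logic cannot be expressed with vectorized operations.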

This sample code uses a user-defined function named calculate_sum() to add two input values. The apply() method calls the function on columns 'A' and 'B' for each row (axis=1), and a new column, "Sum", stores the row sums. This example shows how user-defined functions extend pandas with custom data manipulations.

DataFrame.agg() - Pandas' DataFrame.agg() method applies one or more aggregation functions to a DataFrame's columns. It can compute the mean, median, sum, standard deviation, and other summary statistics in a single call. agg() accepts three forms of input:

- a single function;
- a list of functions, each applied to every column;
- a dictionary that maps column names to the functions to apply to each column.

This versatility makes it easy to produce different statistics for different DataFrame columns. Custom aggregation functions, such as lambda functions or user-defined functions, can also be passed to agg() for even more control over how data is summarized.

Let's examine the Python code:
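Here is a minimal sketch. It assumes a hypothetical DataFrame with numeric columns 'A' and 'B'. The dictionary form at the end shows per-column functions.

```python
import pandas as pd

# Hypothetical sample data.
df = pd.DataFrame({'A': [1, 2, 3, 10], 'B': [4.0, 5.0, 6.0, 7.0]})

# A list of function names gives one row per statistic and one column
# per original column.
agg_result = df.agg(['sum', 'mean', 'median'])

# A dict applies a different function to each named column and returns a Series.
custom_result = df.agg({'A': 'max', 'B': 'min'})

print(agg_result)
print(custom_result)
```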

This example uses agg() to calculate the sum, mean, and median of each column of DataFrame df. The resulting DataFrame, agg_result, has one row per statistic (labeled 'sum', 'mean', and 'median') and one column per original column. This shows how DataFrame.agg() speeds up summary statistics computation in pandas.

DataFrame.transform() - Pandas' transform() method applies a function to a DataFrame or Series and returns a result with the same shape and index as the input. Combined with groupby(), transform() applies the function to each group independently, so users can change column values group-wise without changing the DataFrame's structure. The method takes a function or callable object and passes it each column or group of data. The function must return output of the same length as its input. Because the result is aligned with the original DataFrame by index labels, it can be assigned straight back as a new column. This helps with custom data transformations such as normalization and standardization within data groups.

Look at the sample code to understand:
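Here is a minimal sketch. It assumes a hypothetical DataFrame with a 'Category' column and a numeric 'Value' column. In this sketch, standardize_values() computes a z-score using the sample standard deviation, which is pandas' default (ddof=1).

```python
import pandas as pd

# Hypothetical sample data: two categories with two values each.
df = pd.DataFrame({
    'Category': ['A', 'A', 'B', 'B'],
    'Value': [1.0, 3.0, 10.0, 20.0],
})

def standardize_values(x):
    """Z-score: subtract the group mean, divide by the group standard deviation."""
    return (x - x.mean()) / x.std()

# transform() returns a result aligned to the original index, so it can be
# assigned straight back as a new column.
df['Standardized_Value'] = df.groupby('Category')['Value'].transform(standardize_values)

print(df)
```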

This example defines the standardize_values() function to standardize values within each category. groupby() groups the 'Value' column by the 'Category' column, and transform() applies standardize_values() to each group. The transformed values are stored in a new column named "Standardized_Value", aligned with the original DataFrame by index labels. This shows how transform() simplifies group-wise data transformations in pandas.

Value counts

Pandas' value_counts() method counts the occurrences of each unique value in a DataFrame column (a Series). It returns a Series whose index holds the unique values and whose values are their counts. This helps find the most common values and understand how a categorical variable is distributed. By default, the result is sorted in descending order of frequency, with the most common value first. value_counts() also has two useful parameters:

- normalize returns relative frequencies instead of counts.
- dropna excludes missing values from the count by default (dropna=True); setting it to False counts them as their own entry.

Check out value_counts() example code:
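Here is a minimal sketch. It assumes a hypothetical 'Category' column that includes one missing value, so the dropna parameter has something to act on.

```python
import pandas as pd

# Hypothetical data; the None entry is treated as a missing value.
df = pd.DataFrame({'Category': ['A', 'B', 'A', 'C', 'A', 'B', None]})

# Counts per unique value, most frequent first; missing values are excluded.
value_counts_result = df['Category'].value_counts()

# normalize=True returns proportions of the non-missing values.
proportions = df['Category'].value_counts(normalize=True)

# dropna=False keeps missing values as their own entry.
counts_with_missing = df['Category'].value_counts(dropna=False)

print(value_counts_result)
```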

The 'Category' column in the DataFrame `df` is used as input for the `value_counts()` function, which counts the occurrences of each value. Unique values are used as the index for the Series, while their counts are represented in the `value_counts_result` Series. Printing this Series shows the frequency of each category in the 'Category' column, revealing the dataset's category distribution.

String Methods

Pandas has powerful string methods for managing and analyzing string data in DataFrame columns. These methods are reached through the str accessor and support concatenation, splitting, searching, replacement, and formatting of column values. For example:

- str.replace() replaces substrings with other strings.
- str.lower() and str.upper() convert strings to lowercase and uppercase, respectively.
- str.split() splits strings on a delimiter.

These operations let users manage and analyze textual data directly in DataFrame structures.
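Here is a minimal sketch of these string methods. It assumes a hypothetical 'Name' column of full names.

```python
import pandas as pd

# Hypothetical sample data.
df = pd.DataFrame({'Name': ['John Smith', 'Jane Brown', 'Sam Lee']})

# Split on whitespace: each entry becomes a list of words.
split_names = df['Name'].str.split()

# str.get(0) takes the first element of each list, i.e. the first name.
first_names = split_names.str.get(0)

# Convert every name to uppercase.
upper_names = df['Name'].str.upper()

# regex=False treats 'Brown' as a literal substring.
replaced_names = df['Name'].str.replace('Brown', 'Green', regex=False)

print(first_names, upper_names, replaced_names, sep='\n\n')
```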

Several string operations are applied to the DataFrame df's 'Name' column. The str.split() method splits names by whitespace. The first names are collected using str.get(). The str.upper() method converts all names to uppercase, while str.replace() changes "Brown" to "Green". These procedures show how pandas' string methods efficiently handle and analyze string data in DataFrame columns.

Merge

Pandas' merge() method combines DataFrames on common columns or indexes. It supports database-style inner, left, right, and outer joins. Its main parameters are:

- on selects the column or columns to join on.
- left_on and right_on allow joining when the key columns have different names in the two DataFrames. left_index and right_index join on indexes instead.
- how chooses the join type: "inner" (the default), "outer", "left", or "right".

Rows are matched on the join keys, and the merged DataFrame contains the columns of both DataFrames for the rows that satisfy the join.

Look at the Python code:
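Here is a minimal sketch. It assumes hypothetical left_df and right_df frames that share an 'ID' column. The outer join and the left_on/right_on variant are extra illustrations.

```python
import pandas as pd

# Hypothetical sample data sharing an 'ID' key column.
left_df = pd.DataFrame({'ID': [1, 2, 3], 'Name': ['Alice', 'Bob', 'Carol']})
right_df = pd.DataFrame({'ID': [2, 3, 4], 'Score': [85, 90, 75]})

# Inner join: keep only IDs present in both frames.
merged_df = pd.merge(left_df, right_df, on='ID', how='inner')

# Outer join: keep every ID, with NaN where one side has no match.
outer_df = pd.merge(left_df, right_df, on='ID', how='outer')

# left_on/right_on: join when the key columns have different names.
scores = right_df.rename(columns={'ID': 'StudentID'})
renamed_merge = pd.merge(left_df, scores, left_on='ID', right_on='StudentID')

print(merged_df)
```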

This code produces two DataFrames, left_df and right_df, with 'ID' as their common column. The combined DataFrame merged_df only contains rows in which the two DataFrames' 'ID' values match after an inner join using the merge() method. Aligned by 'ID', the final DataFrame contains columns from both left_df and right_df. The merge() method simplifies combining DataFrames with shared keys or indices, making it easier to integrate and analyze linked datasets.

concat() - Pandas' concat() function concatenates two or more DataFrames along one axis. It can stack rows vertically or place columns side by side horizontally:

- With axis=0 (the default), DataFrames are stacked vertically, one set of rows after another.
- With axis=1, DataFrames are placed side by side, with rows aligned on their index labels.

concat() also offers arguments that control how duplicate indices and missing data are handled. ignore_index renumbers the result. join controls whether labels on the other axis that are not shared are kept ("outer") or dropped ("inner"). This is useful for stacking datasets that share the same columns, or for combining datasets that hold complementary information.
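Here is a minimal sketch. It assumes two hypothetical DataFrames, df1 and df2, with the same columns 'A' and 'B'.

```python
import pandas as pd

# Hypothetical sample data with identical columns.
df1 = pd.DataFrame({'A': [1, 2], 'B': [3, 4]})
df2 = pd.DataFrame({'A': [5, 6], 'B': [7, 8]})

# axis=0 stacks rows; the original index labels (0, 1, 0, 1) are kept.
concatenated_df = pd.concat([df1, df2], axis=0)

# ignore_index=True renumbers the result 0..n-1 instead.
renumbered_df = pd.concat([df1, df2], axis=0, ignore_index=True)

# axis=1 places frames side by side, aligning rows on their index;
# add_suffix keeps the column names distinct.
side_by_side = pd.concat([df1, df2.add_suffix('_2')], axis=1)

print(concatenated_df)
```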

Example DataFrames df1 and df2 have the same column names, 'A' and 'B'. The concat() function concatenates the DataFrames vertically along the rows (axis=0), creating a concatenated_df with rows stacked from df1 and df2. The concat() technique simplifies data integration and manipulation in pandas by combining DataFrames along given axes.

join() - Pandas' join() method combines two DataFrames based on their index labels, much like a database join. Its main parameters are:

- how selects "left", "right", "inner", or "outer". The default is a left join, which keeps all rows of the calling (left) DataFrame and adds matching columns from the other DataFrame according to index labels.
- on joins a column of the left DataFrame to the index of the right DataFrame. Unlike merge(), join() has no left_on or right_on parameters, so a right DataFrame keyed by a column is usually given that column as its index first.
- lsuffix and rsuffix resolve overlapping column names.

This method simplifies combining DataFrames that share index labels, which makes it useful for integrating related datasets.

Look at the code:
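Here is a minimal sketch. It assumes hypothetical left_df and right_df frames that share an 'ID' column. Because join() matches against the other frame's index, right_df's 'ID' column is moved into its index first.

```python
import pandas as pd

# Hypothetical sample data sharing an 'ID' key column.
left_df = pd.DataFrame({'ID': [1, 2, 3], 'Name': ['Alice', 'Bob', 'Carol']})
right_df = pd.DataFrame({'ID': [2, 3, 4], 'Score': [85, 90, 75]})

# Left join: on='ID' matches left_df's 'ID' column to right_df's index.
# ID 1 has no match, so its Score is NaN.
joined_df = left_df.join(right_df.set_index('ID'), on='ID', how='left')

# Index-to-index inner join: keep only IDs present in both frames.
inner_joined = left_df.set_index('ID').join(right_df.set_index('ID'), how='inner')

print(joined_df)
```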

left_df and right_df are two DataFrames with 'ID' as their common column. A left join using the join() method keeps all rows from left_df and adds the matching data from right_df by 'ID'. The resulting DataFrame, joined_df, contains columns from both left_df and right_df, aligned by 'ID'. This shows how join() facilitates data integration by combining DataFrames on shared index labels or key columns.

Grouping/Group by